Policy Network-Based Dual-Agent Deep Reinforcement Learning for Multi-Resource Task Offloading in Multi-Access Edge Cloud Networks

2024-04-28FengChuanZhangXuHanPengchaoMaTianchunGongXiaoxue

Feng Chuan ,Zhang Xu ,Han Pengchao ,Ma Tianchun ,Gong Xiaoxue

1 School of Communications and Information Engineering,Chongqing University of Posts and Telecommunications,Chongqing 400065,China

2 Institute of Intelligent Communication and Network Security,Chongqing University of Posts and Telecommunications,Chongqing 400065,China

3 Key Laboratory of Big Data Intelligent Computing,Chongqing University of Posts and Telecommunications,Chongqing 400065,China

4 School of Information Engineering,Guangdong University of Technology,Guangzhou 510006,China

Abstract: The Multi-access Edge Cloud(MEC)networks extend cloud computing services and capabilities to the edge of the networks.By bringing computation and storage capabilities closer to end-users and connected devices,MEC networks can support a wide range of applications.MEC networks can also leverage various types of resources,including computation resources,network resources,radio resources,and location-based resources,to provide multidimensional resources for intelligent applications in 5/6G.However,tasks generated by users often consist of multiple subtasks that require different types of resources.It is a challenging problem to offload multiresource task requests to the edge cloud aiming at maximizing beneftis due to the heterogeneity of resources provided by devices.To address this issue,we mathematically model the task requests with multiple subtasks.Then,the problem of task offloading of multi-resource task requests is proved to be NPhard.Furthermore,we propose a novel Dual-Agent Deep Reinforcement Learning algorithm with Node First and Link featuresbased on the policy network,to optimize the beneftis generated by offloading multi-resource task requests in MEC networks.Finally,simulation results show that the proposed algorithm can effectively improve the benefti of task offloading with higher resource utilization compared with baseline algorithms.

Keywords: benefti maximization;deep reinforcement learning;multi-access edge cloud;task offloading

I.INTRODUCTION

As emerging network services and applications like Augmented/Virtual Reality (A/VR) [1,2] and the Internet of Things (IoT) [3,4] gain popularity,the need for robust data transmission and processing capabilities continues to grow.These applications typically require stringent Quality of Service (QoS)[5,6],such as lower latency,higher reliability,and lower cost compared to cloud computing.To address these challenges,multi-access edge computing[7,8] has emerged as a promising solution.By deploying edge servers [9] near Base Stations (BSs) at the network edge,Multi-access Edge Cloud (MEC)[10] brings computation and storage functions closer to users and the devices [11] they serve.Moreover,the MEC networks are characterized by a wide range of physical devices [5] utilizing diverse access technologies.Therefore,MEC networks can offer multidimensional resources dispersed across a large number of geographically distributed network devices,including Macro Base Stations(MBSs),Small-cell Base Stations (SBSs),and edge servers,each with varying hardware capabilities in terms of computation,communication,and memory.

However,many current studies tend to focus on limited types of resources,such as computation or storage resources.This can hinder the ability of MEC networks to support diverse and complex applications that require a combination of different types of resources.Traditional offloading decisions typically rely on simple indicators such as network latency,bandwidth,and available server resources to design offloading algorithms,which may not take into account the specifci requirements of task offloading [12].To address this issue,researchers are exploring new approaches to resource allocation and management in MEC networks.Intelligent offloading decisions leverage Machine Learning (ML) and other Artifciial Intelligence(AI)techniques to consider a wide range of factors,including the specifci characteristics and requirements of the task,available resources on different servers,and the historical performance of different offloading strategies.By accommodating these complex factors,intelligent offloading decisions can optimize the offloading process in ways that traditional methods cannot.Designing intelligent offloading decisions in MEC networks with multi-dimensional resources for specifci task requests is also challenging work.

Furthermore,the diverse task requirements of users pose a signifciant challenge for task offloading in MEC networks.A single task request may consist of multiple subtasks [13].In MEC networks,multiresource task requests,such as video processing applications,are common.Video processing entails various subtasks,each with unique resource requirements.For instance,video decoding relies heavily on Central Processing Unit (CPU) resources,while video encoding necessitates both CPU power and network bandwidth.Additionally,subtasks like object detection or video transcoding may benefti from specialized accelerators or Graphics Processing Units(GPUs)for optimal performance.Thus,a video processing application within the MEC networks exemplifeis a multi-resource task request,as it encompasses subtasks that rely on diverse resources such as CPU,network bandwidth,and dedicated accelerators or GPUs.In terms of resource allocation,different task types typically demand distinct types and quantities of resources,which are determined by their specifci characteristics and requirements.

Effciient task offloading in heterogeneous 5G networks is crucial for providing effective intelligent services.Therefore,there is a growing need to explore how MEC networks can leverage their broad types of resources to support a wide range of applications that require different types of resources.In our work,to offer services to users,service providers make use of the physical resources of infrastructure providers.Task offloading by the user beneftis the service providers,who pay the infrastructure provider for the cost of the resources.This paper makes the following contributions:

• In MEC networks,the multi-resource task request consists of multiple subtasks,based on which,multiple edge servers can collaborate and provide support for different types of resources.

• Moreover,we have formulated the problem of task offloading with multiple types of requested resources as a mathematical model to maximize the total benefti.We prove that the multi-resource task offloading problem is NP-hard.

• Specifcially,we propose a novel policy networkbased Dual-Agent Deep Reinforcement Learning(DRL) algorithm with Node First and Link features(NF-L-DA-DRL).Simultaneously,the lowdimensional link-state space is utilized to make path selection decisions in the high-dimensional space.The NF-L-DA-DRL algorithm is designed to optimize the beneftis generated by offloading multi-resource task requests in MEC networks.

• We conduct numerous simulations to verify the effectiveness of the proposed NF-L-DA-DRL algorithm under different scales of physical networks.Additionally,we set up different task request scenarios to verify the scalability of the proposed NF-L-DA-DRL algorithm in large-scale physical networks.Our results demonstrate that the proposed NF-L-DA-DRL algorithm outperforms other algorithms in terms of total benefti and the blocking probability of task requests.

The remainder of this paper is organized as follows.The related works are reviewed in Section II.Section III presents the system models,based on which the problem of task offloading with multi-resource task requests in MEC networks is formulated and analyzed.In Section IV,we propose the policy network-based dual-agent DRL algorithm for multi-resource task offloading.Section V presents the simulation results and we conclude our paper in Section VI.

II.RELATED WORK

Recent research has focused heavily on addressing issues in task offloading,driven by the tremendous potential of computation-intensive and latency-sensitive applications [2].In this paper,we provide a comprehensive review of existing research in the literature from both traditional and intelligent offloading decision-making perspectives.

2.1 Traditional Offloading Decision-Making

Computation and memory resources are primarily centralized in edge servers [14],whereas communication resources are primarily distributed across network edge nodes [15].However,allocating communication resources alone is often insuffciient to meet the demands of computation-intensive and delay-sensitive applications.Current research has primarily focused on pairwise joint optimization of computation,communication,or caching issues,utilizing conventional optimization methods to simultaneously optimize task offloading and resource allocation.

For instance,the objective is to minimize computation delay while satisfying long-term energy consumption constraints in [16].The authors proposed a Lyapunov-based optimization approach to address joint service caching and task offloading in dense cellular networks.In [17],the authors explored a collaborative task offloading and resource allocation optimization scheme aimed at maximizing system utility.The optimization problem is decoupled into subproblems,and game theory and Lagrangian multiplier methods are utilized for offloading decision-making and resource allocation.In [18],the authors investigated cost minimization by exploring the offloading of multiple subtasks to collaborative edge-cloud networks.They formulated the task offloading problem as an integer linear programming and designed the reconfgiuration algorithm to minimize the total offloading cost of tasks.However,these studies overlook the joint optimization of multi-dimensional resources in MEC networks.Moreover,as the number of types of required resources by intelligent applications continues to grow,exact algorithms may be insuffciient to obtain optimal solutions,given the diversity of applications and complexity of wireless networks.Additionally,heuristic algorithms with high time complexity can result in signifciant costs associated with fniding feasible solutions.

2.2 Intelligent Offloading Decision-Making

Unlike traditional heuristic algorithms,Reinforcement Learning(RL)[19,20]optimizes long-term goals and exhibits superior generalization.However,learning the entire system in RL can be time-consuming,making it unsuitable for large-scale networks.Deep Learning (DL) [21,22] excels at representing the environment but has weak decision-making capabilities.To integrate the strengths of both approaches,DRL[23,24]combines RL’s decision-making abilities with DL’s perception abilities.Task offloading and resource allocation problems in MEC networks are non-convex and challenging to solve,with heuristic methods often exhibiting limitations under multi-constraint conditions.DRL[25]offers a promising and effciient solution,allowing for appropriate action selection based on system states in MEC networks.

In [26],the authors utilized the Q-learning method to minimize energy consumption for all users in the single BS and multi-user offloading scenario,while incorporating task delay constraints and resource requirements for heterogeneous computation tasks.Similarly,in [27],the authors used a Deep Qlearning(DQL)algorithm to address the weighted sum of delay and energy-effciient minimization issues for multiple users facing a single-edge server.However,these studies are limited to the offloading scenario of a single BS and multiple users and do not address the offloading scenario of multiple BSs and multiple users.

In [28],the authors utilized a combination of deep neural networks and DQL to address optimization issues.The authors considered the influence of task delay and energy consumption on task offloading,transformed the offloading problem into a multiobjective optimization problem,and devised a deep meta-reinforcement learning method to resolve the issue.In[29],the authors aimed to minimize the sum of delay cost and energy consumption for all terminal devices in the multi-user wireless MEC system.The author proposed an adaptive framework based on DQN to address the issues of task offload and resource allocation.The value-based DRL algorithm can suffer from a limited number of discretized action samples,resulting in deviations in learned actions and suboptimal performance in task offloading and resource allocation.

In the context of single-agent DRL methods,[30]focused on minimizing delay by exploring a DRL algorithm that optimizes content caching,task offloading,and resource allocation simultaneously.In[31],the authors aimed to optimize offloading and edge caching through the design of a vehicle-mounted network architecture capable of intelligently managing computation and caching resources.Although the single-agent DRL method can facilitate offloading decision-making by collecting all relevant system state information,the method may encounter significant computation pressure and long solution times as the network scale expands,making it challenging to identify feasible solutions within reasonable timeframes.

The existing literature on offloading research primarily focuses on a limited range of resources available in edge servers,such as computing or storage resources.This narrow focus may hinder the ability of MEC networks to support complex applications that require a combination of different resource types.Furthermore,current work primarily revolves around task requests that involve fewer resource types,despite the increasing variations in intelligent applications.

Then,certain offloading approaches neglect the collaboration potential among edge servers,assuming that users can only offload tasks to a specifci nearby server.However,this oversight can result in resource imbalances and underutilization due to server heterogeneity and varying task requests.By promoting collaboration between edge servers,users can offload multi-type resource tasks,allowing each server to handle more tasks effciiently.

Traditional offloading decisions primarily consider indicators like network delay,bandwidth,and server resources.However,they often neglect the specifci requirements of offloading tasks,especially subtasks with different resource needs.As intelligent applications demand a wider range of resource types,the solution space for mathematical models grows substantially.By incorporating machine learning and other AI techniques,intelligent offloading decisions can encompass task-specifci characteristics and resource availability across multiple servers,providing a more comprehensive approach.

DRL plays a key role in agent response to rewards or penalties,habit formation,and effciient identifciation of optimal task offloading solutions.However,the existence of multi-type resource requests in tasks introduces challenges in constructing the state space for DRL and defniing suitable reward functions.These challenges are aimed at streamlining the optimization of neural network parameters and improving the effectiveness of neural network training.

Therefore,it’s essential to effciiently allocate multiple types of resources across the edge nodes and links in MEC networks.We comprehensively consider multi-resource task requests consisting of multiple subtasks,aiming to design an effciient intelligent task offloading strategy to maximize the total benefti in MEC networks.

III.SYSTEM MODELS AND PROBLEM FORMULATION

In this section,we introduce the MEC networks and task request models.Then we describe the multiresource task offloading problem which is proven to be NP-hardness.

3.1 Multi-Access Edge Cloud Networks

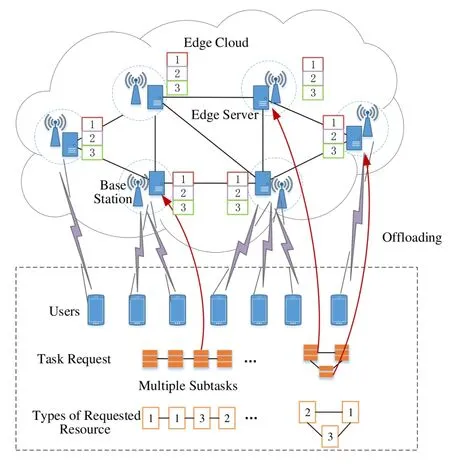

Models of MEC networks and task requests are shown in Figure 1.We use a directed graphGs(V s,Es) to model the physical networks,whereV sis a set of edge nodes,including BSs and edge servers.Esrepresents a set of physical links,including wired links between edge servers.Multiple edge servers can collaborate to serve task requests.In MEC networks,it is assumed that all users can access edge servers deployed on multiple nearby BSs through 5G wireless links.Each edge nodeis ∈V shasTtypes of resources,and the total amount of resources and remaining resources of typet ∈Tare denoted byrespectively.Each physical linkjs ∈Esis directed,we useusandvsto denote the source node and destination node of linkThen,we utilizeBjs,andLjsto represent the number of total bandwidth,the number of remaining bandwidth,and packet loss rate of linkjs,respectively.

Figure 1.Multi-resource task offloading in MEC networks.

In addition,the remaining bandwidthand packet loss rateof thekth paththat includes multiple links from source nodeasto destination nodebscan be obtained by Eqs.(1) and(2).

3.2 Task Request Model

A multi-resource task request with multiple subtasks is represented by a directed graphGr(V r,Er),whereV ris the set of requested subtasks,Eris the set of links between the requested subtasks.It is worth noting that the term “link” used here refers to a logical connection between subtasks,rather than a physical link.The set of all task requests is denoted byR,∀r ∈R.The number of resources of typetfor task requestir ∈V risThe amount of bandwidth resources requested by thejr ∈Erth link isBjr,and the maximum acceptable packet loss rate isLjr.The source and destination nodes of the linkjrare denoted byrespectively.

3.3 Multi-Resource Task Offloading Problem

In this subsection,the multi-resource task offloading problem with multiple subtasks is modeled asP1.For clarity,we summarize all the main notations and their defniitions in Table 1.The optimization goal is to maximize the total benefti of task offloading,as shown in Eq.(3).Service providers utilize the physical resources provided by infrastructure providers to offer services to their users.Service providers benefti from the subtasks offloaded by users and are responsible for paying the resource cost to the infrastructure provider.In Eq.(3),the offloading benefti is generated by all subtasks and the links between all subtasks.

Table 1.Summary of main notations.

The task offloading of multi-resource task requests is subject to the constraints of Eqs.(4)and(5),where one subtask in the multi-resource task request can only be offloaded to one edge server in MEC networks,as shown in Eq.(4).Equation (5) indicates that,in a multi-resource task request,a subtask can only request one type of resource on the edge server.Equation(6)defnies the constraint on thetth type resource capacity of edge servers.Specifcially,the total amount of thetth type of resource capacity occupied by all task requests cannot exceed the total amount of thetth type of resource capacity available in MEC networks.In addition,the link allocation between subtasks in the task request is constrained by Eqs.(7)-(10).Each link in a multi-resource task request with multiple subtasks can only select one physical link in one direction in MEC networks,as shown in Eq.(7).Equation (8)demonstrates flow conservation,meaning that the total incoming flow to each edge server is equal to the total outgoing flow.Equation (9) defnies the constraint on the bandwidth capacity of physical links in MEC networks.Specifcially,the total amount of link bandwidth occupied by all task requests cannot exceed the total link bandwidth capacity.The packet loss rate constraint of links in multi-resource task requests isshown in Eq.(10),which indicates that the packet loss rate of the allocated physical path is less than or equal to the tolerable packet loss rate of the link requested by the task request.

Based on the problemP1,the frist item of the objective function is a non-linear function,as shown in Eq.(3).With the exception of Eqs.(6)and(10),the formulated problem is a Mixed Integer Non-Linear Programming (MINLP) problem.As MINLP is known to be NP-hard,it follows that problemP1is also NPhard.

IV.POLICY NETWORK-BASED DUALAGENT DRL ALGORITHM FOR OFFLOADING

To solve the optimization problemP1,we propose a policy network-based dual-agent DRL algorithm for obtaining an optimized offloading and resource allocation scheme.By utilizing dual agents,the agents can coordinate their actions and share information,resulting in more effciient and effective decision-making.This can lead to improved system performance,as well as reduced risk of failure and better resource utilization.The agents can work together to achieve a common goal and adapt to changing environments and situations,making the system more flexible and adaptable.The overall framework of the NFLDADRL algorithm is illustrated in Figure 2.The algorithm presented in this section consists of three steps.

Figure 2.The overall framework of the NF-L-DA-DRL algorithm.

•Feature Extraction.The node state and link state features are extracted from the MEC networks and input to the dual agents separately.

•Action Decisions.The dual-agent output node actions and path actions through a series of layers,including the input layer,convolution layer,Softmax layer,fliter layer,and output layer.The MEC networks update the network resource states based on the node and path actions and then provide new states to the agents.

•Evaluation.The evaluation function is used to assess the performance of the agent in the current state and action,and then update the agent’s parameters based on the reward obtained from the function.By iteratively interacting with the MEC network environment,the dual-agent seeks to maximize the total benefti of the system.

4.1 NF-L-DA-DRL Framework

By using policy networks in task offloading,several advantages can be gained.Policy networks are capable of learning the optimal policy for offloading tasks based on the current state of the system,leading to more effciient and effective decision-making.They can explore the action space more effciiently,which can result in better convergence and improved system performance.Policy networks also have the ability to generalize well to new situations and environments.Additionally,policy networks can improve the stability of the learning process and converge to the optimal policy faster and more reliably than other methods.

The NF-L-DA-DRL algorithm utilizes a policy network architecture and follows a subtask offloading priority approach,followed by link allocation.The algorithm includes two agents: one for subtask offloading and the other for link assignment between subtasks.These agents cooperatively complete the task offloading process.The algorithm leverages a lowdimensional link state space to select path actions in a high-dimensional space,which explains the abbreviation of the algorithm as NF-L-DA-DRL.

Subtask Offloading Agent.When the subtask is offloaded,the node feature matrix composed of all edge node features in MEC networks is collected,and then input into the subtask offloading agent,and the subtask offloading agent outputs the probability that all edge nodes are selected.Next,we select the edge node with the highest probability to place subtasks until all subtasks in the task request are offloaded and calculate the subtask offloading rewards.It is important to note that edge nodes in MEC networks can offer multiple types of resources,and each subtask in the task request can choose from different types of resources.As shown in the subtask offloading agent in Figure 2,multiple layers of different types of resources are presented.Each resource layer corresponds to a particular type of resource state in each edge node.During the node state feature extraction process,the state of each resource type is input into its corresponding resource layer.

Link Assignment Agent.When allocating links for the task request,the link characteristic matrix consisting of all link characteristics in MEC networks is collected.The link characteristic matrix is then input into the link assignment agent,which outputs the probability of selecting each physical path.The physical path with the highest probability is selected for allocation,and this process is repeated until all links in the task request have been allocated.Finally,the rewards for link allocation are calculated.

It is important to note that the space of link states is low-dimensional,while the state space of paths is high-dimensional.Using the high-dimensional path state space to select the path action directly would result in a signifciant delay in obtaining a determined action.To address this issue,the link assignment agent maps the high-dimensional path-action space to the low-dimensional link state space,which speeds up the decision time of the agent.Furthermore,the link assignment agent compensates for the loss of accuracy resulting from the mapping from low-dimensional to high-dimensional space by increasing the depth of the convolutional layer.

Evaluation.The evaluation function is used to calculate the reward for each state and action,which assesses the performance of the current agent in a given state and action.The subtask offloading agent and link assignment agent are then updated using the policy gradient method to adjust their parameters based on the rewards obtained.

4.2 Environmental States

4.2.1 Node Features

To enable the agent to learn a better subtask offloading strategy in MEC networks that contain multiple types of resources,we extract four node features including node remaining resource,node betweenness centrality,node feature vector centrality,and node connection centrality.These features can more accurately describe the state of edge nodes,as shown in Eqs.(11)-(14).

Equation (11) represents the amount of remaining resources for edge nodes.Equation (12) represents node betweenness centrality,which measures the number of times the edge node serves as a bridge for the shortest path between two other edge nodes.The betweenness centrality of a node increases as it serves as an intermediary for more shortest path routes.In Eq.(12),ηas,bsrepresents the number of shortest paths between edge nodesasandbs,as ≠bs.ηas,bs(is)indicates whether the shortest path betweenasandbspasses through nodeis.If it does,the value is 1;otherwise,the value is 0.The eigenvector centrality of a node is shown in Eq.(13).Eigenvector centrality measures the importance of a node based on the importance of its neighbors.The more important a node connected to a given node is,the more important the given node itself becomes.In Eq.(13),λis a constant.Aas,isindicates that ifasis a neighbor node ofis,the value is 1;otherwise,the value is 0.Furthermore,the node connection centrality is expressed by Eq.(14),which measures the distance from edge nodeisto all other nodes in MEC networks.Among them,dis,asrepresents the hop count of the shortest path between nodeisand nodeas.

4.2.2 Link Features

We extract four features of physical links including the remaining link bandwidth,packet loss rate,betweenness centrality,and remaining bandwidth mean differe nce.Ljsrepresents the packet loss rate of thejsth physical link,where∀js ∈Es.These features enable a more accurate description of the state of physical links in MEC networks.The features other than the packet loss rate are shown in Eqs.(15)-(17).

Equation(15)indicates the number of remaining resources for physical links.Equation (16) represents the betweenness centrality,which is used to measure the importance of links based on the number of shortest paths that pass through linkjs.ηas,bs(js)indicates whether the shortest path betweenasandbsincludes pathjs.If it does,its value is 1;otherwise,its value is 0.The mean difference of remaining bandwidth is calculated as the difference between the remaining bandwidth of linkiand the mean remaining bandwidth of all links in MEC networks,as demonstrated in Eq.(17).

4.3 Dual Agents

The NF-L-DA-DRL algorithm based on dual-agent DRL employs a policy network consisting of two agents: a subtask offloading agent and a link assignment agent.The policy network directly outputs the probability of selecting various available actions based on the current state of the environment.Through continuous interaction with the environment,RL dynamically adjusts the policy parameters,gradually guiding the decision-making process toward optimal solutions.In the RL model,a larger reward value indicates the effectiveness of the current action and encourages its continuation,while a smaller or negative reward value signals the need for adjustments as the current action proves to be incorrect.Furthermore,by deepening the convolutional layer,DRL enhances the accuracy of path selection in the link assignment stage.This improvement allows for more precise decision-making regarding the assignment of links.

4.3.1 Subtask Offloading Agent

The subtask offloading agent consists of the input layer,the convolutional layer,the Softmax layer,the flitering layer,and the output layer,as shown in Figure 2.

• The input layer reads the characteristic state matrixof all edge nodes related to thetth type of resources.First,collect the node characteristics in MEC networks to construct the characteristic matrixof theisth edge node related to thetth type of resources.includes the number of remaining resources of nodes,node betweenness centrality,node eigenvector centrality,and node connection centrality,as shown in Eq.(18).

Next,the feature matrices of all edge nodes are combined to create the node feature matrix of thetth type of resources in MEC networks.This matrix is then normalized using the deviation standardization method to obtain the normalized feature matrixwhere all values are between 0 and 1,as shown in Eq.(19).

• The convolution layer performs convolution operations on the normalized feature matrixusing the convolution kernel weight vectorω1and bias termb1.The ReLU function is an activation function that operates as a piecewise linear function.If the input value is greater than 0,the function returns the input value directly.If the input value is 0 or less,the function returns 0.represents the available resource vectors used by the convolutional layers to generate candidate actions.Thus,the convolution operation on the characteristic state matrix of the edge node related to thetth type of resources is shown in Eq.(20).

• The Softmax layer converts the available resource vectorof candidate actions into the corresponding probability of each action being selected,denoted asin Eq.(21).

• The flitering layer eliminates all actions that fail to meet the constraints of types of node resources,the number of node resources,node one-to-one offloading,and flow conservation.Subsequently,we obtain the available resource vector and the set of candidate actions for the updated action.After applying the fliter layer,the output layer sets the probability of any actions that do not meet the constraints to 0.It then recalculates the probabilities to obtain a new probability distributionas shown in Eq.(22).Finally,the probabilities for all actions are outputted,and the action with the highest probability is selected for offloading subtasks.

• The reward function for the subtask offloading agent is set to the total benefti obtained from subtask offloading requested by a single task,as shown in Eq.(23).On the other hand,the loss function for the subtask offloading agent is given by Eq.(24).

4.3.2 Link Assignment Agent

The link assignment agent comprises the input layer,the convolutional layer,the Softmax layer,the flitering layer,and the output layer,as shown in Figure 2.

• The function of the input layer is to read the characteristic state matrixILof all physical links.Initially,the feature matrixof thejsth physical link is created by aggregating the link characteristics in MEC networks,which include the remaining link bandwidth,packet loss rate,link betweenness centrality,and the mean difference of remaining bandwidth,as shown in Eq.(25).

After collecting the feature matrix of all links,the link feature matrix of the MEC networks is formed and subsequently normalized using the dispersion standardization method to obtain the normalized link feature matrixIL,which ensures that the values inILfall within the range of 0 to 1,as shown in Eq.(26).

• The feature matrixILundergoes a convolution operation with the weight vectorω2representing the convolutional kernel and the bias termb2.The available resource vectors for generating action candidates through the convolutional layers are denoted byCL.Hence,the convolution operation is carried out on the characteristic state matrixILof all physical links,as illustrated in Eq.(27).

In Eq.(27),CLrepresents the resource vectors that are available for the candidate link actions generated by the convolutional layer.represents the elements in vectorCL,which contains a total of|K|elements.

The Softmax layer converts the available resource vectorCLof candidate actions into the corresponding probability of each action being selected,denoted asin Eq.(28).

• The flitering layer eliminates all path actions that fail to meet the constraints of link direction,flow conservation,bandwidth capacity,and packet loss rate.It sets the probabilities of these actions to 0 and calculates the updated probabilities and action set for all actions.The output layer reevaluates the action probabilities obtained from the flitering layer to generate a new probability distributionPL,as illustrated in Eq.(29).The probabilities for all actions are then outputted,and the action with the highest probability is selected for link assignment.

• The reward function for the link allocation agent is defnied as the total benefti obtained from link allocation between subtasks requested by a single task,as shown in Eq.(30).Eq.(31) represents the loss function for the link allocation agent.

4.4 NF-L-DA-DRL Algorithm

The pseudocode of NF-L-DA-DRL algorithm is shown in Algorithm 1.

Line 1 of the NF-L-DA-DRL algorithm iterates over all subtasks of a task request.Line 3 extracts the feature matrixof edge nodesisaccording to Eq.(18).Note that the type of resource to be selected in edge nodeisshould be determined by the type of resource requested by theirth subtask in the task request.Lines 2-4 gather the characteristics of all edge nodes of MEC networks and utilize Eq.(18)in Line 5 of the algorithm to construct the feature matrixof all edge nodes.

Line 11 begins to traverse the links between subtasks.Line 13 extracts the feature matrixof the physical linkjsaccording to Eq.(25).Lines 12-14 gather the characteristics of all physical links and use Eq.(26) in Line 15 of the algorithm to construct the feature matrix for all links in MEC networks.In Line 16,ILis inputted into the link assignment agent.Then,in Line 17,the path with the highest probability among physical links is selected for allocation,and network resources are updated in Line 18.The reward brought by path selection is calculated according to Eq.(30).Finally,in Line 21,the parameters of the subtask offloading agent and link allocation agent are updated to complete the offloading of a task request.Similarly,the NF-L-DA-DRL algorithm is executed for all tasks to complete the offloading of all task requests.

V.PERFORMANCE EVALUATIONS

In this section,we introduce the parameter settings of the physical networks and task requests,as well as the evaluation metrics and comparison algorithms used in the simulation.To evaluate the performance of our proposed method,we develop a simulation platform using Pycharm,which runs on a computer with an Intel i7-11370H 3.30 GHz CPU and 16GB memory.

5.1 Settings

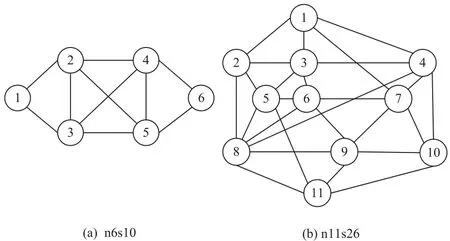

For our simulations,we consider two edge cloud network topologies:n6s10 and n11s26,which correspond to the small network topology with|V s|=6,|Es|=10,and the large network topology with |V s|=11,|Es|=26,respectively,as depicted in Figure 3.Each node can provide three types of resources,with each type having a capacity of 10000.The cost unit price and selling unit price of the resource for each node are set to[0.3,0.2,0.1]and[0.5,0.4,0.3],respectively.The total bandwidth of links is set to 10000.The packet loss rate of a link is randomly generated in the range of[0,0.4].The cost unit price and selling unit price of link bandwidth resources are set to 0.1 and 1,respectively.

Figure 3.Network topologies used in the simulation.

For the n6s10 topology,we set the number of subtasks in each task in[2,4]randomly when the number of subtasks in each task is not specifcially described.For the n11s26 topology,the task requests are randomly generated with the number of subtasks ranging from 2 to 6.The minimum number of links is equal to the number of subtasks minus 1,and the maximum number of links is given by |V r|(|V r|-1)/2.Each subtask in the task requests requires three types of resources,with the amount of each type ranging from 50 to 150.The amount of requested link bandwidth is set randomly in [50,150].The tolerable packet loss rate of the requested link ranges from 0.3 to 0.5.

In the DRL part,we confgiure the following parameters: the learning rate is set to 0.01,the discount factor is set to 0.95,and the depth of the convolutional layer is set to 20.

The proposed NF-L-DA-DRL algorithm is compared with several approaches as follows.

• NF-L-SA-DRL [32]: using a single agent to sequentially perform subtask offloading and link allocation.The feature state matrix comprises both node and link features,which are the same as those used in the NF-L-DA-DRL algorithm.

• NF-P-SA-DRL[33]:using a single agent for subtask offloading and link allocation,but with a feature state matrix that includes not only node features but also path features such as remaining bandwidth,packet loss rate,betweenness centrality,and mean difference of remaining bandwidth for each path.

• NF-P-DA-DRL[34]:employing two agents to offload tasks in a sequence of subtask offloading and link allocation,similar to the NF-L-DA-DRL algorithm.However,when assigning links,the link allocation agent considers path characteristics such as remaining bandwidth,packet loss rate,betweenness centrality,and mean difference of remaining bandwidth for each path.

• LF-L-SA-DRL [35]: using a single agent to offload subtasks and assign direct links between the newly offloaded subtask and all previously completed subtasks.This process is repeated until the direct links between the last offloaded subtask and all previously offloaded subtasks are allocated,completing the task offloading.The feature state matrix of the input agent for MEC networks comprises both node and link features,which are the same as those used in the NF-L-DA-DRL algorithm.

• LFPSA DRL [32]: using a single agent to offload tasks by alternately offloading subtasks and links,similar to the LFLSA DRL algorithm.However,the feature state matrix of the input agent for MEC networks includes both node and path features,which are the same as those used in the NFPSA DRL algorithm.

• LF-L-DA-DRL: employing two agents to offload tasks by alternately offloading subtasks and links,similar to the LF-L-SA-DRL algorithm.The feature state matrix of the input agent for MEC networks comprises both node and link features,which are the same as those used in the NF-L-DA-DRL algorithm.

• LF-P-DA-DRL:using two agents to offload tasks by alternately offloading subtasks and links,similar to the LF-L-SA-DRL algorithm.However,the feature state matrix of the input agent for MEC networks comprises both node and path features,which are the same as those used in the NF-P-DA-DRL algorithm.

The performance metrics used to evaluate the proposed algorithm are listed below.

•Total benefit.One of the evaluation metrics used in this section is total benefti,as shown in Eq.(3).

•Blocking rate.The blocking rate is defnied by Eq.(32),which represents the blocking rate of multi-type task requests with multiple subtasks.

•The average node resource utilization.Equation (33) displays the average node resource utilization rate for thetth type of resources.

•The average bandwidth resource utilization.Equation(34)displays the ratio of the bandwidth allocated to task requests over all the bandwidth resources.

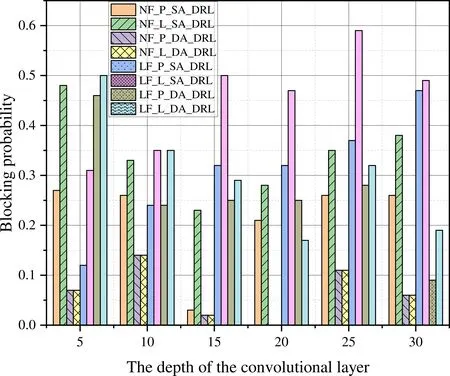

5.2 Performance Analysis Based on Different Convolutional Layer Depths

In actual network topologies,the number of paths is often much greater than the number of links.As a result,task offloading schemes based on path features face a high-dimensional feasible solution search space,which can result in considerable time costs.Furthermore,the scale of the network topology expands,which makes fniding an optimal offloading solution within a short period of time challenging due to the signifciantly increased number of paths.

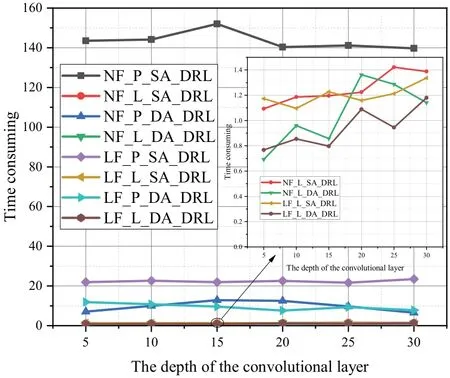

Figure 4 illustrates the time consumption of different algorithms based on the small topology n6s10.The fgiure clearly shows that the time consumption of the four algorithms based on path features -NF-P-SA-DRL,NF-P-DA-DRL,LF-P-SA-DRL,and LF-P-DA-DRL -is relatively high.Furthermore,the NF-P-SA-DRL algorithm has the highest time consumption,with a maximum of approximately 152.01564 seconds.However,the time consumption of the four algorithms based on link features -NF-L-SA-DRL,NF-L-DA-DRL,LF-L-SA-DRL,and LF-L-DA-DRL-generally remains in the range of approximately 0.7-1.4 seconds.It is evident that algorithms based on link features can signifciantly improve the effciiency of the offloading algorithm.

Figure 4.The time consuming of different algorithms for task requests with a random number of subtasks in n6s10.

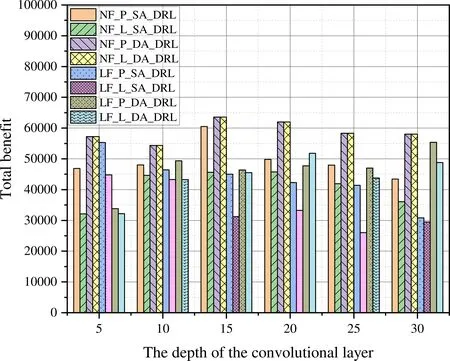

However,as shown in Figure 5,the algorithms using link features generate slightly lower total beneftis than the algorithms using path features.This tradeoff between benefti and time effciiency is the cost of improving time effciiency.To offset the decline in the benefti,we adopt a dual-agent offloading scheme,which generates a higher total benefti than the singleagent scheme.In the dual-agent solution,the total benefti based on link features is lower than the benefti based on path features.Additionally,we increase the depth of the convolutional layer to further improve the total benefti.However,determining the optimal depth of the convolutional layer involves a trade-off between depth and effciiency.For instance,with a depth of 20 for the convolutional layer,the total benefti of the NF-P-DA-DRL algorithm is 61968.2968,while the total benefti of the NF-P-SA-DRL algorithm is 49841.2187,representing a 24.33% increase in the total benefti of the NF-P-DA-DRL algorithm over the NF-P-SA-DRL algorithm.At this depth,the total benefti of the NF-L-DA-DRL algorithm is also 61968.2968,which is the same as that of the NF-P-DA-DRL algorithm,indicating that the cost incurred by using the link features is minimal.However,when the depth of the convolutional layer is set to 15,the total benefti of the NF-L-DA-DRL algorithm is about 63589.5859,which is the highest among all depths tested.

Figure 5.Total benefit of different algorithms for task requests with a random number of subtasks in n6s10.

If we consider the blocking rate as shown in Figure 6,the NF-L-DA-DRL algorithm has a blocking rate of 0 when the convolutional layer depth is 20,and a blocking rate of 0.02 when the convolutional layer depth is 15.Taking all factors into consideration,a convolutional layer depth of 20 is the most appropriate choice.At this depth,the total benefti is the second highest,lower but only slightly than the highest,and the blocking rate is the lowest.

Figure 6.Blocking probability of different algorithms for task requests with a random number of subtasks in n6s10.

5.3 Performance Analysis for Task Requests with Different Numbers of Subtasks

5.3.1 Number of Fixed Subtasks

Figure 7 illustrates the total benefti of task requests for different algorithms with a fxied number of subtasks.When the number of subtasks is 3 and the number of task requests reaches 210,the total benefti stabilizes.Similarly,when the number of subtasks is 4 and the number of task requests reaches 120,the total benefti stabilizes.Furthermore,when the number of subtasks is 3 and the number of task requests reaches 300,the NF-L-DA-DRL algorithm yields approximately 5.15%higher total benefti than the NF-L-SA-DRL algorithm,13.14%higher than the LF-L-DA-DRL algorithm,and 20.73%higher than the LF-L-SA-DRL algorithm.Because the NF-L-DA-DRL algorithm uses dual agents,subtask offloading and link allocation are split into two agents for parallel learning.This approach signifciantly reduces the learning diffciulty of the agents and accelerates convergence to the optimal subtask offloading and link optimization results.Thus,the NF-L-DA-DRL algorithm yields the highest total benefti.

Figure 7.Total benefit of different algorithms for task requests with a constant number of subtasks in n11s26.

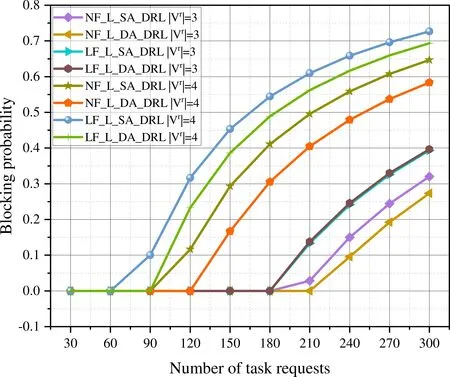

When the number of subtasks is 3 and the number of task requests reaches 300,the NF-L-DA-DRL algorithm exhibits the lowest blocking rate compared to the other algorithms in Figure 8.The LF-L-SA-DRL algorithm employs a single agent to perform task offloading.Once the offloading is completed,the agent receives a comprehensive reward and loss function for both subtask offloading and link allocation and updates its parameters accordingly.Because single-agent learning is less effciient than dual-agent learning,the training complexity is higher.Consequently,the LF-L-SA-DRL algorithm exhibits the highest blocking rate when the number of subtasks is 3 or 4,while the NF-L-DA-DRL algorithm has the lowest blocking rate.

Figure 8.Blocking probability of different algorithms for task requests with a constant number of subtasks in n11s26.

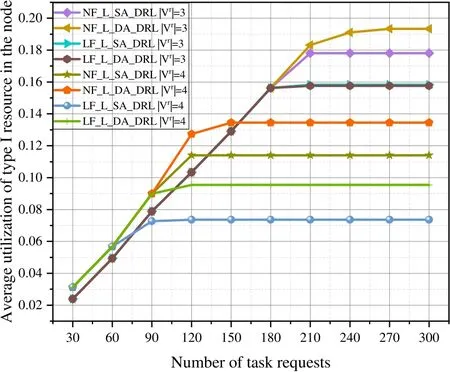

Figure 9 illustrates the average link resource utilization of various algorithms with a fxied number of subtasks for each task.Even under the same algorithm,the average link resource utilization rate exceeds when the number of subtasks is 4 compared to when it is 3.Since the blocking rate of the NF-L-DA-DRL algorithm is the lowest and the number of successfully offloaded task requests is the highest in Figure 8,the average link resource utilization rate of the NF-L-DA-DRL algorithm is also the highest,which is approximately 20.31% higher than that of the LF-L-DA-DRL algorithm.

Figure 9.Average utilization rate of link resource of different algorithms for task requests with a fixed number of subtasks in n11s26.

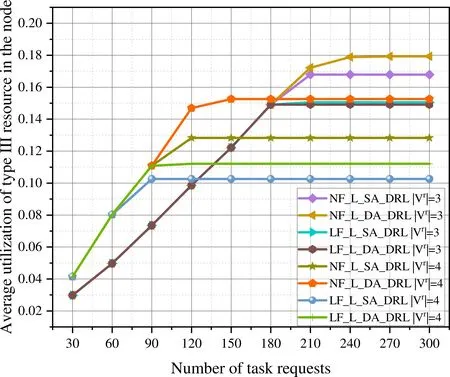

Figure 10.Average utilization rate of type I resource in the node of different algorithms for task requests with a fixed number of subtasks in n11s26.

Figure 11.Average utilization rate of type II resource in the node of different algorithms for task requests with a fixed number of subtasks in n11s26.

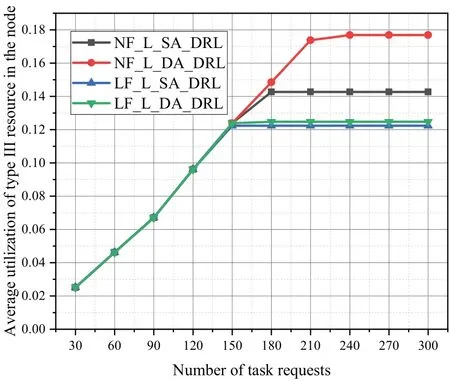

Figure 12.Average utilization rate of type III resource in the node of different algorithms for task requests with a fixed number of subtasks in n11s26.

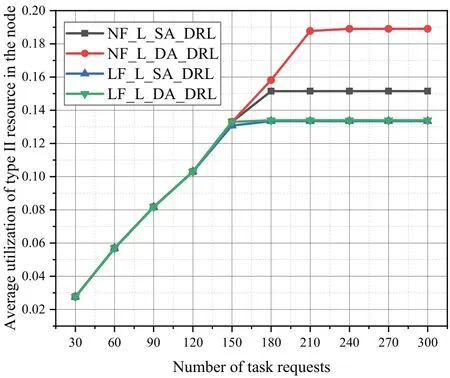

Based on the analysis of the average node resource utilization in Figures 10-12,when the number of subtasks is 3 and the number of task requests reaches 310,the average node resource utilization stabilizes.Additionally,the NF-L-DA-DRL algorithm exhibits the highest average node resource utilization.When the number of subtasks is 4 and the number of task requests reaches 120,the average node resource utilization tends to be stable,and the NF-L-DA-DRL algorithm has the highest average node resource utilization.Task requests with a small number of subtasks are easier to offload to the MEC networks,which allows MEC networks to accommodate more task requests with fewer subtasks.Regardless of whether the number of subtasks is 3 or 4,the NF-L-DA-DRL algorithm that utilizes dual agents exhibits higher average node resource utilization compared to the other algorithms.

5.3.2 Number of Randomly Generated Subtasks

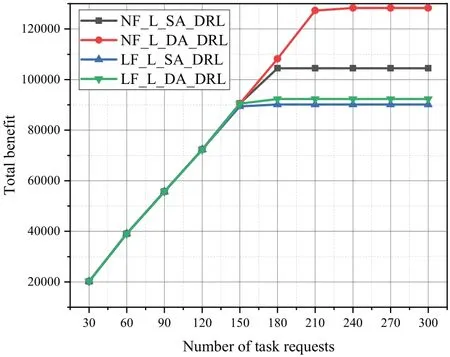

Figure 13 indicates that the NF-L-DA-DRL algorithm achieves the highest total benefti for task requests with a random number of subtasks among the various algorithms.The total benefti of the different algorithms is not signifciantly different when the number of task requests falls within the range of 30 to 150.When the number of task requests reaches 210,the total benefti of the NF-L-SA-DRL,LF-L-SA-DRL,and LF-L-DA-DRL algorithms stabilizes,while the total benefti of the NF-L-DA-DRL algorithm begins to reach a steady state.At this point,the NF-L-DA-DRL algorithm generates a total benefti close to 120,000,which is approximately 12.99% higher than that of the NF-L-SA-DRL algorithm,approximately 22.44%higher than that of the LF-L-DA-DRL algorithm,and approximately 26.42% higher than that of the LF-L-SA-DRL algorithm.

Figure 13.Total benefit of different algorithms for task requests with a random number of subtasks in n11s26.

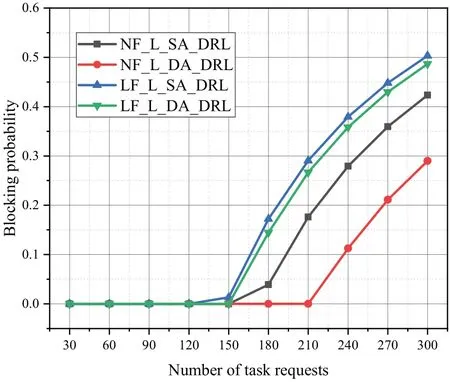

Figure 14 illustrates that the blocking rate of the NF-L-DA-DRL and NF-L-SA-DRL algorithms is lower than that of the LF-L-DA-DRL and LF-L-SA-DRL algorithms utilizing alternate offloading of links,under the same conditions.Nevertheless,when utilizing the same offloading order,the NF-L-DA-DRL algorithm utilizing the dual-agent offloading method exhibits a lower blocking rate compared to the NF-L-SA-DRL algorithm that uses a single-agent offloading method.When the number of task requests reaches 150,the blocking rate of the NF-L-SA-DRL,LF-L-DA-DRL,and LF-L-SA-DRL algorithms starts to increase signifciantly.

Figure 14.Blocking probability of different algorithms for task requests with a random number of subtasks in n11s26.

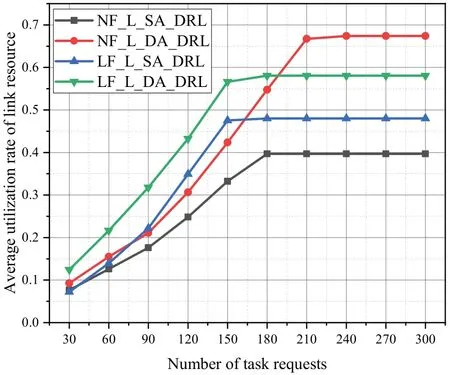

Figure 15 indicates that the average utilization rate of link resources varies across different algorithms for task requests with a random number of subtasks.The average link resource utilization of the NF-L-DA-DRL algorithm remains at a moderate level when the number of task requests is within the range of 30 to 180.In theory,lower average link resource utilization implies less link bandwidth usage by the MEC networks.With suffciient node resources,the MEC networks can handle more task requests.Based on the analysis presented in Figure 14,the NF-L-DA-DRL algorithm exhibits the lowest blocking rate and can handle the largest number of task requests under the same conditions,indicating its tendency to select the optimal offloading path.When the number of task requests is 210,the blocking rate starts to increase signifciantly while the link resource utilization rate of the NF-L-DA-DRL algorithm begins to stabilize.Hence,at a constant blocking rate,the NF-L-DA-DRL algorithm exhibits lower bandwidth cost and higher link resource utilization rate.It enables the MEC networks to provide better user QoS than the other algorithms.

Figure 15.Average utilization rate of link resource of different algorithms for task requests with a random number of subtasks in n11s26.

Figure 17.Average utilization rate of type II resource in the node of different algorithms for task requests with a random number of subtasks in n11s26.

Figure 18.Average utilization rate of type III resource in the node of different algorithms for task requests with a random number of subtasks in n11s26.

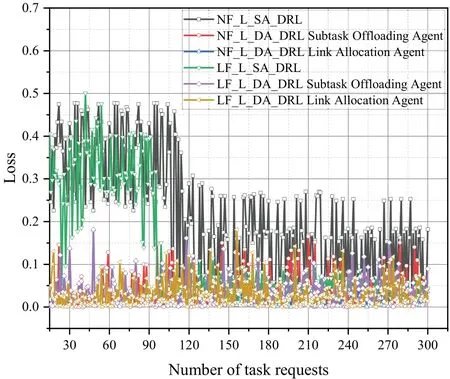

The two-stage offloading sequence in the NF-L-DA-DRL algorithm based on subtask priority yields better average node resource utilization compared to the link alternation offloading sequence,as shown in Figures 16-18.When utilizing the same offloading order,the NF-L-DA-RL algorithm using dual agents exhibits higher average node resource utilization compared to the NF-L-SA-RL algorithm using a single agent.Compared to the single agent,dual agents can extract edge node attributes and link attributes separately,leading to faster convergence to a stable state during the agent learning process.

Figure 19 presents the comparison between agent loss functions for the four algorithms.The dualagent algorithm exhibits a signifciantly faster convergence speed of the loss function when compared to the single-agent algorithm.The loss functions of the LF-L-SA-DRL and NFLSA-DRL algorithms converge at around 90 and 120 task requests,respectively.In contrast,both the subtask offloading agent and link assignment agent of the NF-L-DA-DRL and LF-L-DA-DRL algorithms exhibit convergence at around 30 task requests.As the two agents of the NF-DA-DRL algorithm receive separate feature matrices for node attributes and link attributes,the dualagent algorithm exhibits faster learning speed during the offloading process,allowing the network parameters to converge to optimal values more quickly.

Figure 19.Loss of different algorithms for task requests with a random number of subtasks in n11s26.

When the number of requested nodes is randomized,dual-agent algorithms generally have a faster running time than single-agent algorithms,as shown in Figure 20.As the number of task requests increases,the difference in running time becomes more pronounced.In Figure 20,the unit of the consumed time is seconds(s),which represents the total time taken for 300 task requests to complete offloading.The algorithms that frist offload subtasks and then allocate links experience a slightly longer running time compared to the algorithms that alternate between subtask and link offloading.Overall performance indicators,such as total benefti,blocking rate,average link resource utilization,and average node resource utilization,indicate that the algorithms that offload subtasks frist and then allocate links outperform the algorithms that alternate between subtasks and link offloading.By sacrifciing relatively little time cost,the former approach can lead to a higher total benefti.

Figure 20.Time consuming of different algorithms for task requests with a random number of subtasks in n11s26.

Therefore,we have developed the NF-L-DA-DRL algorithm for the following reasons:

•P1is indeed an NP-hard problem,although it does not strictly fall under the category of sequential decision problems.As a combinatorial optimization problem,P1 can be considered a sequential optimization problem.Leveraging the advantages of DRL in solving combinatorial optimization problems becomes highly benefciial in this context.DRL algorithms excel in handling complex task scenarios,optimizing resource allocation,and adapting to environments.

• In resource allocation and optimization problems,traditional algorithms such as heuristics,mathematical programming models (e.g.,integer programming,linear programming),genetic algorithms,and population optimization algorithms are often considered as alternatives to DRL.However,while traditional algorithms may be useful for solving NP-hard problems,they may not be as effective in addressing the specifci challenges present in problemP1.

• Furthermore,it is worth noting that DRL algorithms can transform the complexity of traditional algorithms from factorial to exponential.This means that DRL has the potential to provide exponential improvements in solving complex optimization problems compared to traditional approaches.

• Regarding the training time of DRL algorithms,it is true that they can be computationally expensive due to the substantial number of training iterations involved.However,the complexity gap between the proposed DRL algorithm and conventional algorithms relies on the specifci problem instance at hand.It is crucial to assess the trade-off between the computational time required by DRL algorithms and the quality of the solutions they offer compared to traditional algorithms.

• In our proposed NF-L-DA-DRL method,we employ a mapping technique that effectively reduces the time complexity by mapping a highdimensional space to a low-dimensional space.This optimization helps accelerate the agent’s decision-making process,thus further enhancing its effciiency.

VI.CONCLUSION

This paper focuses on emerging applications in MEC networks,which provide multi-dimensional resources to support a wide range of services.Firstly,we explore the problem of multi-resource task offloading aimed at maximizing total benefti.The problem is mathematically formulated and has been proven to be NP-hard.Based on the formulation,the proposed NF-DA-DRL algorithm leverages a dual-agent policy network with a DRL approach to make offloading decisions that consider the characteristics of tasks and the available multi-dimensional resources in MEC networks.To assess the effectiveness and scalability of the proposed NF-DA-DRL algorithm,we selected different network topologies and task request scenarios for verifciation.Finally,simulation results demonstrate that the proposed algorithm surpasses the compared algorithms in terms of total benefti and resource utilization.

ACKNOWLEDGEMENT

This work was supported in part by the National Natural Science Foundation of China under Grants 62201105,62331017,and 62075024,in part by the Natural Science Foundation of Chongqing under Grant cstc2021jcyj-msxmX0404,in part by the Chongqing Municipal Education Commission under Grant KJQN202100643,and in part by Guangdong Basic and Applied Basic Research Foundation under Grant 2022A1515110056.

杂志排行

China Communications的其它文章

- Distributed Application Addressing in 6G Network

- A Support Data-Based Core-Set Selection Method for Signal Recognition

- Actor-Critic-Based UAV-Assisted Data Collection in the Wireless Sensor Network

- Integrated Clustering and Routing Design and Triangle Path Optimization for UAV-Assisted Wireless Sensor Networks

- Joint Task Allocation and Resource Optimization for Blockchain Enabled Collaborative Edge Computing

- Stochastic Gradient Compression for Federated Learning over Wireless Network