Suitability of Zernike moments for general image retrieval

2016-09-13

SHOJANAZERI Hamid1, TENG Shyh Wei1, LU Guojun1, ZHANG Dengsheng1, LIU Ying2

(1.School of Information Technology, Federation University, Churchill 3842, Australia; 2. School of Communications and Information Engineering, Xi’an University of Posts and Telecommunications, Xi’an 710121, China)


Image retrieval systems are essential tools for dealing with large volumes of visual data, and they require effective descriptors to produce reasonable results. Zernike moments (ZMs) have often been used in the literature for object representation and retrieval. We note that most existing works tested ZMs on datasets in which each image contains a single object with a tight bounding box. We investigate the suitability of ZMs for general image representation and retrieval. Through theoretical analysis and an experimental study, we show that ZMs are not suitable for representing and retrieving general images, which may contain multiple objects of different sizes, at different locations, and with different orientations and backgrounds.

image retrieval, Zernike moments, multimedia retrieval, image analysis

CLC Number: TN911.7 Document Code: A Article ID: 2095-6533(2016)04-0034-09


0 Introduction

Image retrieval systems are becoming a very important tool for dealing with large volumes of visual information. Different descriptors, such as color, texture, shape and attributed graphs, are used to characterize the content of an image. There are two main approaches to capturing the shape of an object: contour-based descriptors and region-based descriptors. Contour-based descriptors, such as Fourier descriptors, curvature scale space, histograms of centroid distances and chain codes, capture information from the boundary of a shape[1-5]. However, these methods neglect the information inside the shape. Region-based methods capture the global aspect of a shape and extract features from the entire shape. To retrieve images from a large database, descriptors need to be invariant to rotation, scale and translation. Image moments have been introduced as good candidates that meet these criteria. However, geometric image moments suffer from information redundancy and high computational complexity. ZMs[6] use orthogonal basis functions and are claimed to address the information redundancy and computational complexity of geometric moments.

ZMs have been used in many applications in the literature, ranging from pattern recognition to image retrieval[7-15]. One of the original works applied ZMs to image recognition[14]. ZMs have also been used for image retrieval[7-10,12-13], where the magnitudes of ZMs serve as feature vectors. Modified phase information has also been incorporated to improve the accuracy of ZMs[9]. Several researchers[16-17] have studied the accuracy of ZMs from the perspective of numerical computation.

So far, all of the aforementioned research using ZMs for image retrieval has evaluated the proposed methods on databases in which a single object occupies the entire image. In general image retrieval, however, images may contain multiple objects of different sizes, at different locations, and with different orientations and backgrounds. This research investigates the suitability of ZMs for general image retrieval. Through theoretical analysis and an experimental study, we conclude that ZMs are not suitable for general image retrieval.

This paper is organized as follows. Section 1 analyzes the calculation of ZMs and highlights the limitations of using ZMs in general image retrieval applications. Section 2 details the experimental study under different image conditions. Section 3 concludes the work.

1 Zernike moments and suitability for image retrieval

ZMs are a set of continuous image moments defined on a set of complex polynomials $\{V_{n,m}\}$. These polynomials form a complete orthogonal set over the unit disk $x^2+y^2\le 1$ in polar coordinates. The Zernike polynomial is defined as

$$V_{n,m}(x,y)=V_{n,m}(\rho,\theta)=R_{n,m}(\rho)\,e^{jm\theta} \tag{1}$$

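where $n$ is a non-negative integer. $m$ is an integer (positive, negative or zero) subject to two constraints: $n-|m|$ is even and $|m|\le n$. $R_{n,m}(\rho)$ is the radial polynomial. $\rho$ is the length of the vector from the origin to pixel $(x,y)$. $\theta$ is the angle between the vector $\rho$ and the $x$-axis, measured counterclockwise. For a digital image $f(x,y)$, the ZM of order $n$ with repetition $m$ is calculated as

$$A_{n,m}=\frac{n+1}{\pi}\sum_{x}\sum_{y} f(x,y)\,V_{n,m}^{*}(x,y),\qquad x^{2}+y^{2}\le 1 \tag{2}$$

where $^{*}$ denotes the complex conjugate.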
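Equation (1) relies on the radial polynomial $R_{n,m}(\rho)$, which is not written out above. Its standard closed form is $R_{n,m}(\rho)=\sum_{s=0}^{(n-|m|)/2}\frac{(-1)^s\,(n-s)!}{s!\,\left(\frac{n+|m|}{2}-s\right)!\,\left(\frac{n-|m|}{2}-s\right)!}\,\rho^{\,n-2s}$. The Python sketch below evaluates this form and lists the $(n,m)$ pairs up to a given order. The helper names `radial_poly` and `valid_orders` are hypothetical. Keeping only $m\ge 0$ assumes a real-valued image. The count of 36 magnitudes also assumes that "order 10" means all orders up to 10, which the text does not state explicitly.

```python
from math import factorial


def radial_poly(n, m, rho):
    """Zernike radial polynomial R_{n,m}(rho), standard closed form."""
    m = abs(m)
    if n < 0 or m > n or (n - m) % 2:
        raise ValueError("need n >= 0, |m| <= n and n - |m| even")
    total = 0.0
    for s in range((n - m) // 2 + 1):
        coeff = (-1) ** s * factorial(n - s) / (
            factorial(s)
            * factorial((n + m) // 2 - s)
            * factorial((n - m) // 2 - s)
        )
        total += coeff * rho ** (n - 2 * s)
    return total


def valid_orders(max_order):
    """(n, m) pairs with 0 <= m <= n <= max_order and n - m even.

    Negative m is skipped: for a real image, A_{n,-m} is the complex
    conjugate of A_{n,m}, so both have the same magnitude.
    """
    return [(n, m) for n in range(max_order + 1)
            for m in range(n + 1) if (n - m) % 2 == 0]


print(radial_poly(2, 0, 0.5))   # R_{2,0} = 2*rho**2 - 1  -> -0.5
print(len(valid_orders(10)))    # 36 magnitudes up to order 10
```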

Only the magnitudes of ZMs are rotation invariant. To achieve translation and scale invariance, the image must be normalized using the following equation

(3)

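where $(x_c, y_c)$ is the centroid (center of mass) of the image, calculated using the following equations

$$x_c=\frac{\sum_{x}\sum_{y} x\,f(x,y)}{\sum_{x}\sum_{y} f(x,y)} \tag{4}$$

$$y_c=\frac{\sum_{x}\sum_{y} y\,f(x,y)}{\sum_{x}\sum_{y} f(x,y)} \tag{5}$$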
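Eqs. (4) and (5) translate directly into code. The minimal Python sketch below computes the centroid of a grey-level image stored as a list of rows. The function name and the 8 x 8 test image are hypothetical. The sketch also shows how one extra foreground pixel, standing in for a second object, pulls the centroid away from the original object. This is the effect behind several of the situations discussed in this section.

```python
def centroid(img):
    """Center of mass (x_c, y_c) of an image, following Eqs. (4) and (5).

    img[y][x] holds the pixel value f(x, y): x is the column index and
    y is the row index.
    """
    mass = sum_x = sum_y = 0.0
    for y, row in enumerate(img):
        for x, value in enumerate(row):
            mass += value
            sum_x += x * value
            sum_y += y * value
    if mass == 0:
        raise ValueError("image has no foreground pixels")
    return sum_x / mass, sum_y / mass


# Hypothetical 8 x 8 binary image: a 2 x 2 object near the top-left corner.
image = [[0.0] * 8 for _ in range(8)]
for y in (1, 2):
    for x in (1, 2):
        image[y][x] = 1.0
print(centroid(image))   # (1.5, 1.5)

image[6][6] = 1.0        # add one pixel as a tiny second "object"
print(centroid(image))   # (2.4, 2.4): pulled toward the new pixel
```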

Fig.1 Unit circle of the image for ZM calculation

The above calculation is invariant to rotation, scaling and translation only when images contain tightly bounded single objects.

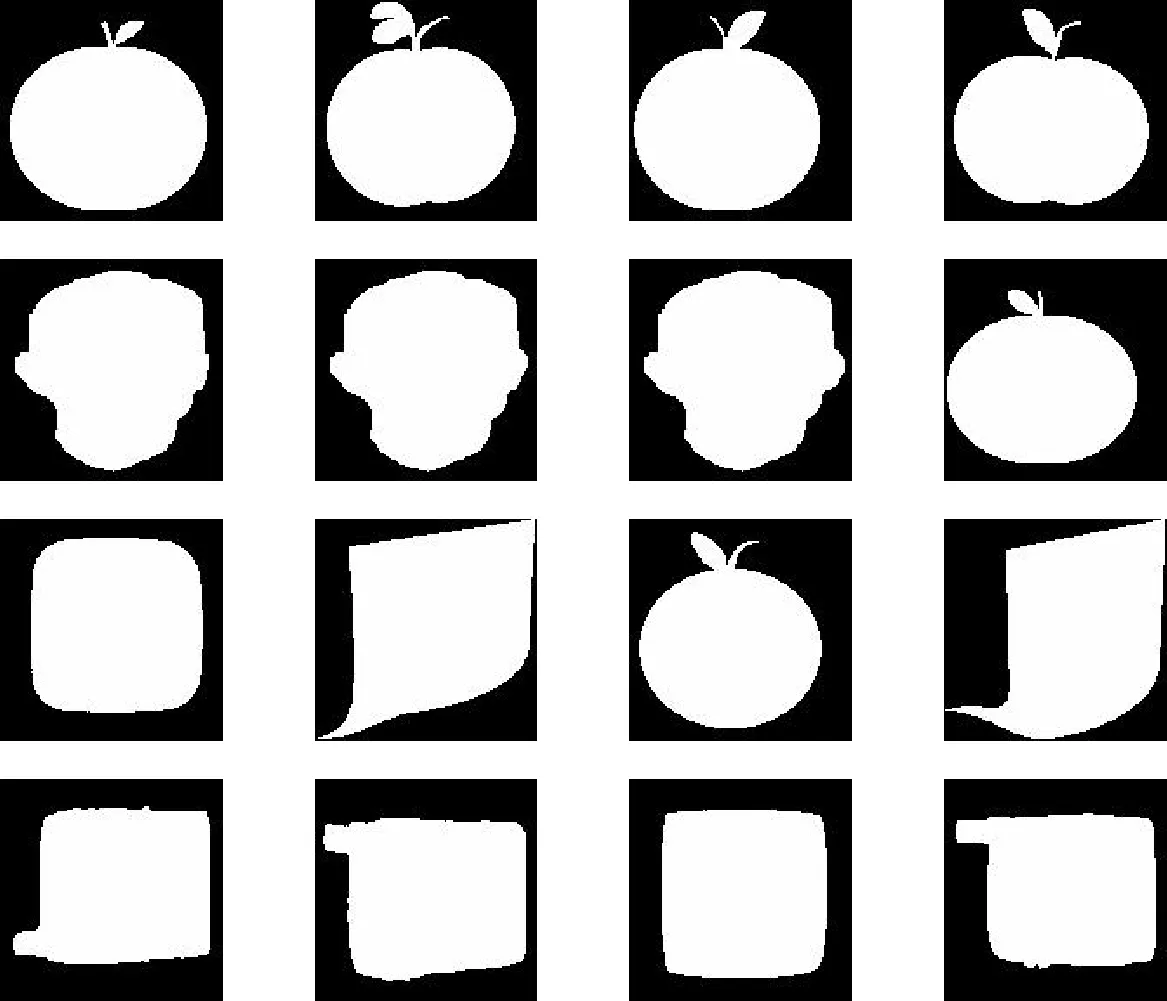

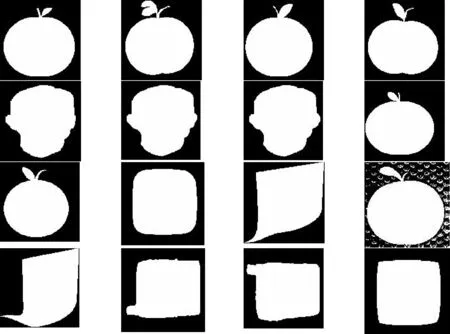

In real image retrieval applications, images may contain multiple objects of different sizes, at different locations, and with different orientations and backgrounds. In addition, the main objects in images cannot be accurately segmented. In this case, the above ZM calculation is not invariant. Specifically, we consider the following situations.

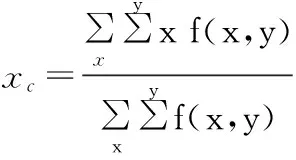

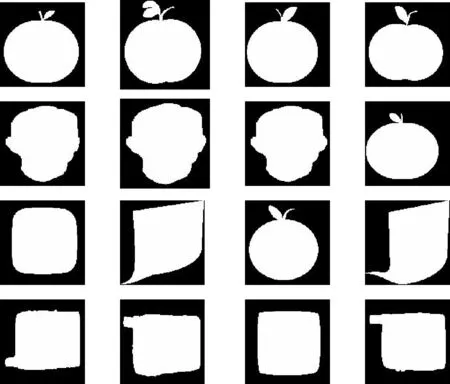

(1) Images containing multiple objects (Fig.2(b)): the centroid and f(x,y) differ from those of the original image (Fig.2(a)).

(2) Images containing objects of different sizes (Fig.2(c)): f(x,y) differs from that of the original image (Fig.2(a)).

(3) Images containing objects of different sizes at different locations (Fig.2(d)): the centroid and f(x,y) differ from those of the original image (Fig.2(a)).

(4) Images containing objects of different sizes and orientations (Fig.2(e)): the centroid and f(x,y) differ from those of the original image (Fig.2(a)).

(5) Images containing objects on different backgrounds (Fig.2(f)): the centroid and f(x,y) differ from those of the original image (Fig.2(a)).

Visually, the six images in Fig.2 are similar. Ideally, therefore, when any of the six images is used as a query, the other five images should be retrieved and ranked highly (as similar). However, because of the differences in centroid location and/or f(x,y) listed above, the ZMs of the six images are very different. This leads to large distances among the six images and low retrieval accuracy.

Fig.2 Different situations in real image retrieval application
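The argument above can be checked numerically on a toy example. The Python sketch below computes ZM magnitude features for a small synthetic L-shaped object. It then compares them with a translated copy, a 90-degree rotated copy and a copy that contains a small second object.

This is an illustration under stated assumptions, not the authors' implementation:

- The normalization of Eq. (3) is replaced by a hypothetical scheme: coordinates are centered on the centroid and scaled so that the farthest foreground pixel lies on the unit circle.
- The pixel-area factor 1/radius² is an assumption.
- The names `zm_features`, `make_image` and `distance`, and the test shapes, are hypothetical.

```python
import cmath
from math import atan2, factorial, hypot, pi


def radial_poly(n, m, rho):
    """Zernike radial polynomial R_{n,m}(rho) in its standard closed form."""
    m = abs(m)
    return sum(
        (-1) ** s * factorial(n - s)
        / (factorial(s) * factorial((n + m) // 2 - s) * factorial((n - m) // 2 - s))
        * rho ** (n - 2 * s)
        for s in range((n - m) // 2 + 1)
    )


def zm_features(img, max_order=10):
    """|A_{n,m}| for 0 <= m <= n <= max_order, n - m even (36 values for order 10).

    ASSUMED normalization (stand-in for Eq. (3)): coordinates are centred on
    the centroid and scaled so the farthest non-zero pixel lies on the unit circle.
    """
    pixels = [(x, y, v) for y, row in enumerate(img) for x, v in enumerate(row) if v]
    mass = sum(v for _, _, v in pixels)
    xc = sum(x * v for x, _, v in pixels) / mass
    yc = sum(y * v for _, y, v in pixels) / mass
    radius = max(hypot(x - xc, y - yc) for x, y, _ in pixels) or 1.0
    feats = []
    for n in range(max_order + 1):
        for m in range(n % 2, n + 1, 2):
            acc = 0j
            for x, y, v in pixels:
                rho = hypot(x - xc, y - yc) / radius
                theta = atan2(y - yc, x - xc)
                acc += v * radial_poly(n, m, rho) * cmath.exp(-1j * m * theta)
            # 1 / radius**2 is the area of one pixel in unit-disk coordinates.
            feats.append(abs(acc) * (n + 1) / (pi * radius ** 2))
    return feats


def make_image(size, rects):
    """Hypothetical binary test image built from (x0, y0, width, height) rectangles."""
    img = [[0.0] * size for _ in range(size)]
    for x0, y0, w, h in rects:
        for y in range(y0, y0 + h):
            for x in range(x0, x0 + w):
                img[y][x] = 1.0
    return img


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5


L_SHAPE = [(4, 4, 6, 2), (4, 6, 2, 6)]
original = make_image(32, L_SHAPE)
shifted = make_image(32, [(x + 12, y + 10, w, h) for x, y, w, h in L_SHAPE])
rotated = [list(row) for row in zip(*original[::-1])]     # 90-degree rotation
with_extra = make_image(32, L_SHAPE + [(26, 26, 3, 3)])   # small second object

f0 = zm_features(original)
for name, img in [("shifted", shifted), ("rotated", rotated), ("extra object", with_extra)]:
    print(name, distance(f0, zm_features(img)))
```

Under these assumptions, the shifted and rotated copies give distances at floating-point noise level. The copy with a small second object gives a clearly non-zero distance, mirroring situation (1).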

We will use experimental examples to show the impact of the above five situations on retrieval accuracy.

2 Experimental study

In the literature, several studies[7-10,12-13] have exploited ZMs for image retrieval. However, their proposed methods were evaluated on databases in which each image contains only a single object. The object is generally located at the center of the image and is tightly bounded. The MPEG-7 CE shape database[7-10,13], the COIL-100 database and the Kimia database[8-9] are frequently used as benchmarks in such research. To investigate the suitability of ZMs for retrieving general images from a database, we selected the MPEG-7 CE shape database. This database contains 1400 images grouped into 70 classes. ZMs of order 10 are used in this work, as this order has been recognized as sufficient for image representation[8-9,13]. Performance is measured using precision and recall[18], as these are among the most commonly used measures in image retrieval research.
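The Python function below is a minimal sketch of how precision and recall[18] can be computed for a single query. It walks a ranked list and reports (precision, recall) after each retrieved image. It assumes that an image is relevant when it belongs to the query's class; in the MPEG-7 CE shape database each class has 1400/70 = 20 images. The function name, its arguments and the sample ranked list are hypothetical.

```python
def precision_recall(ranked_labels, query_label, num_relevant):
    """(precision, recall) after each position of a ranked retrieval list.

    ranked_labels: class labels of retrieved images, best match first.
    num_relevant: number of database images in the query's class.
    """
    points = []
    hits = 0
    for k, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            hits += 1
        points.append((hits / k, hits / num_relevant))
    return points


# Hypothetical ranked list for an "apple" query with 4 relevant images.
print(precision_recall(["apple", "apple", "deer", "apple"], "apple", 4))
```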

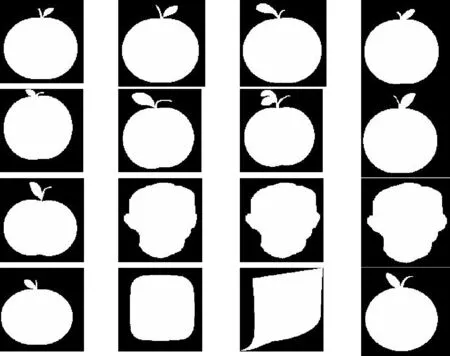

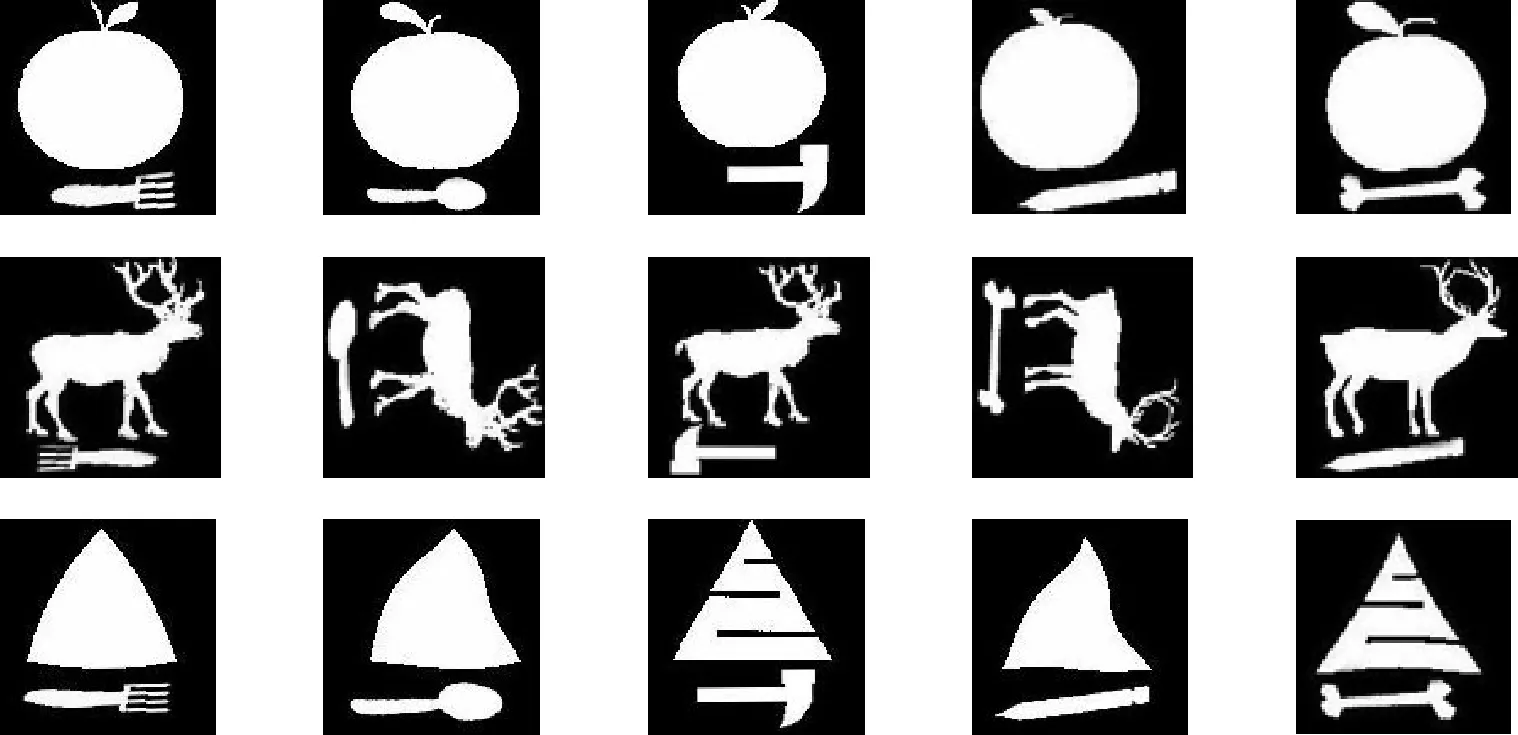

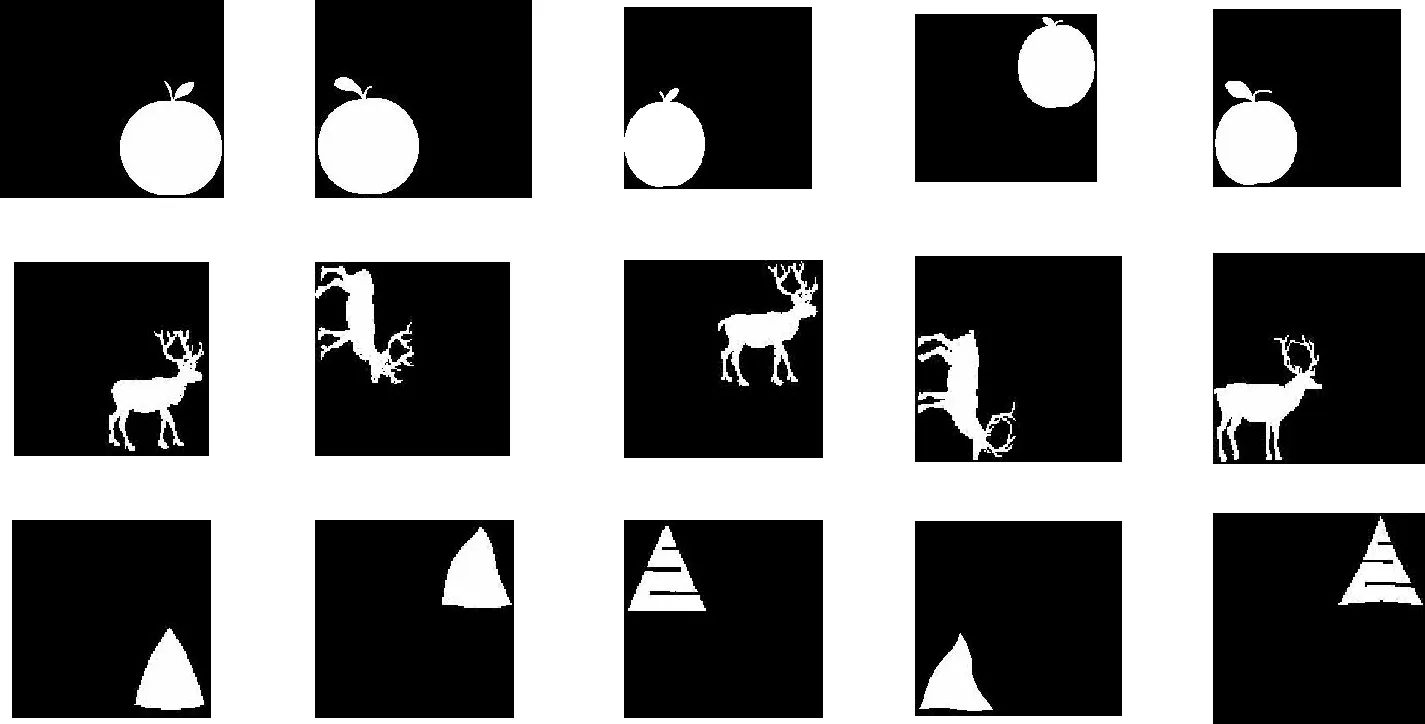

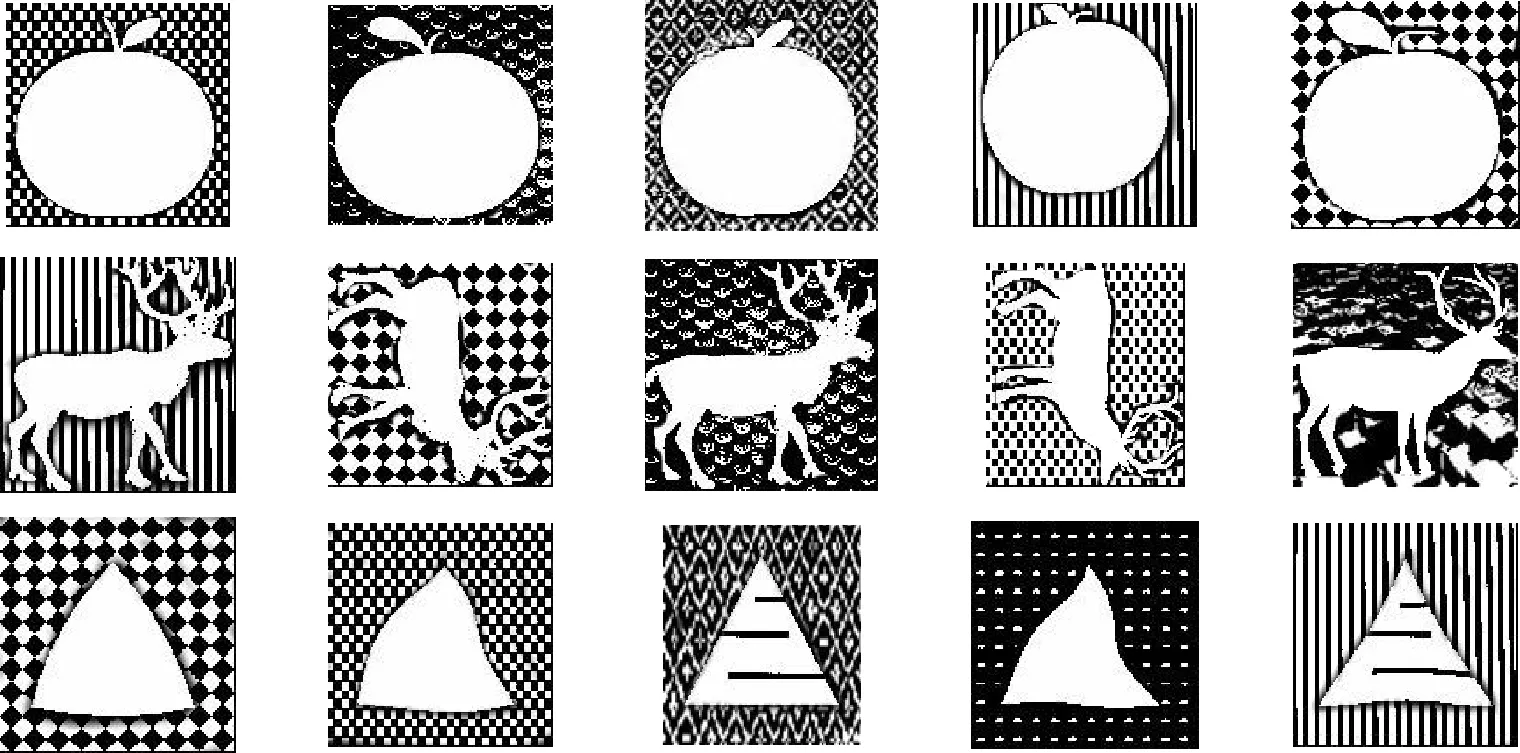

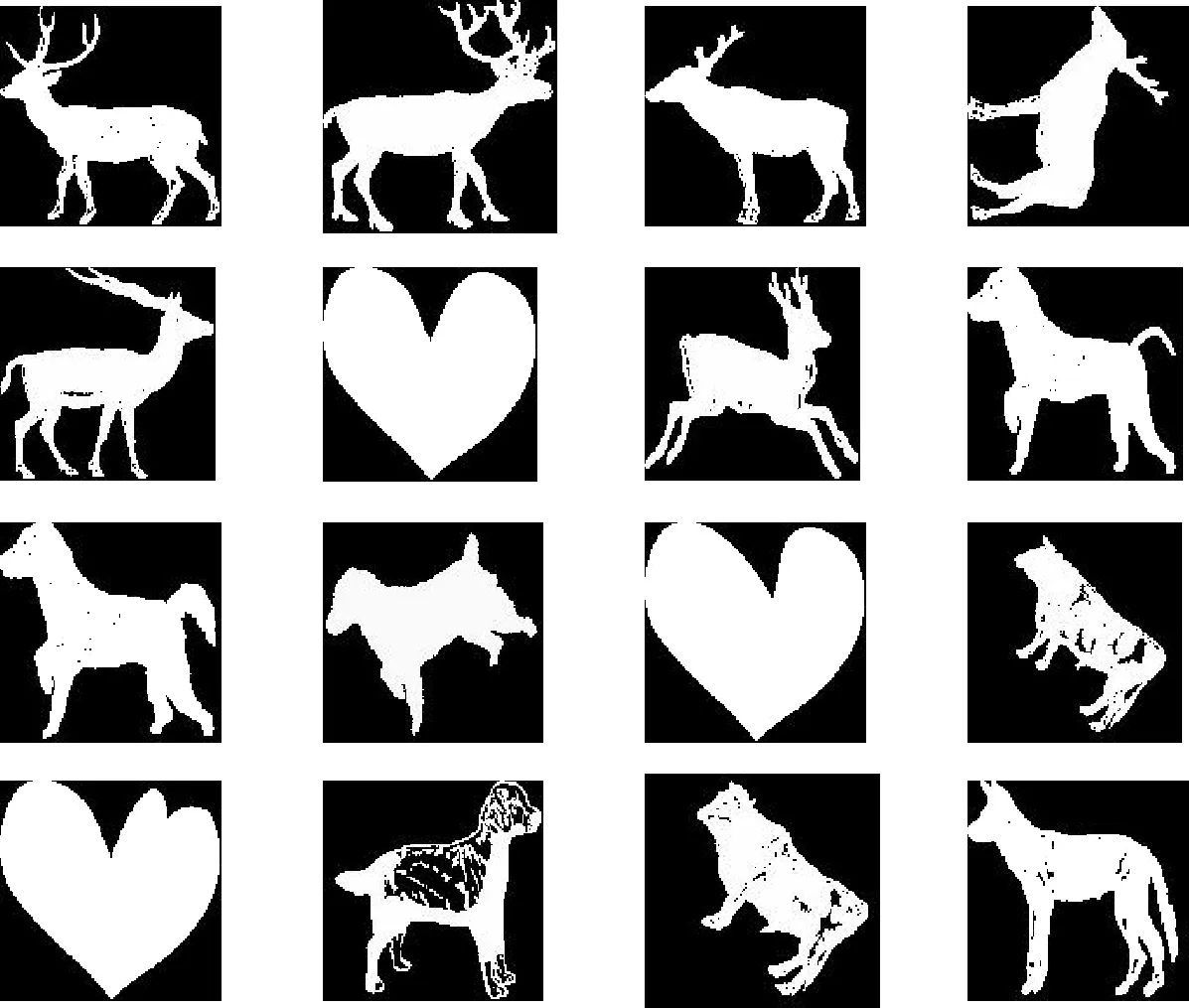

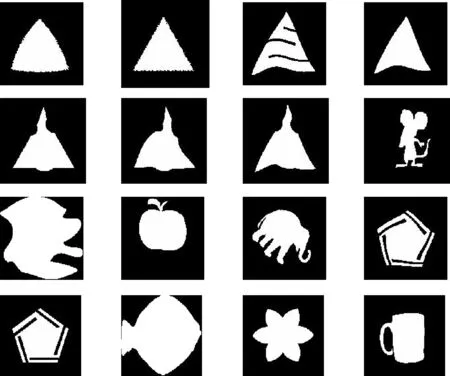

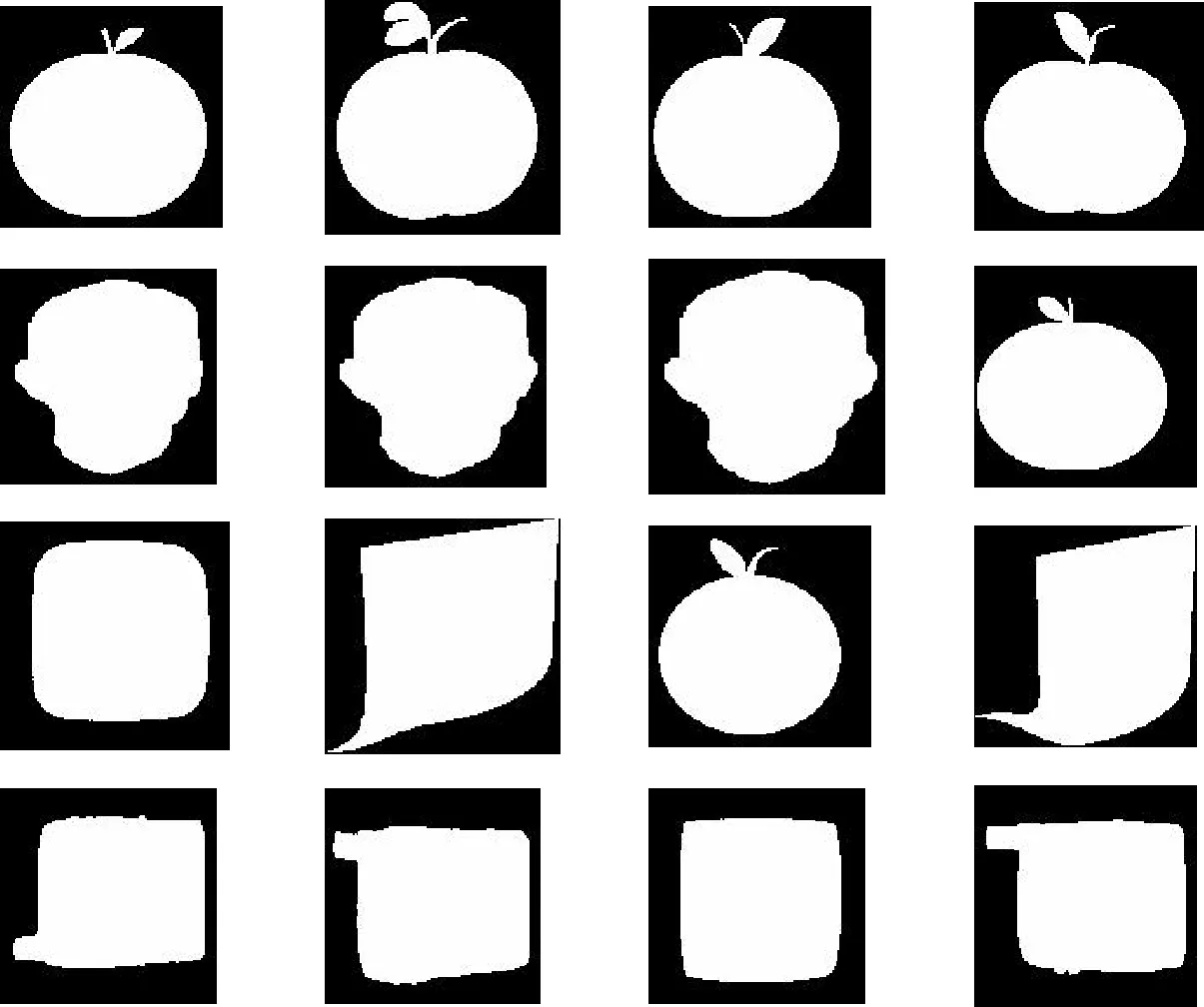

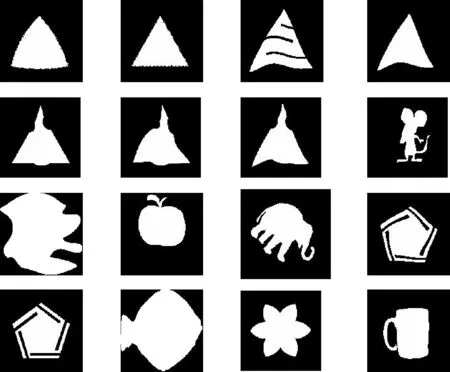

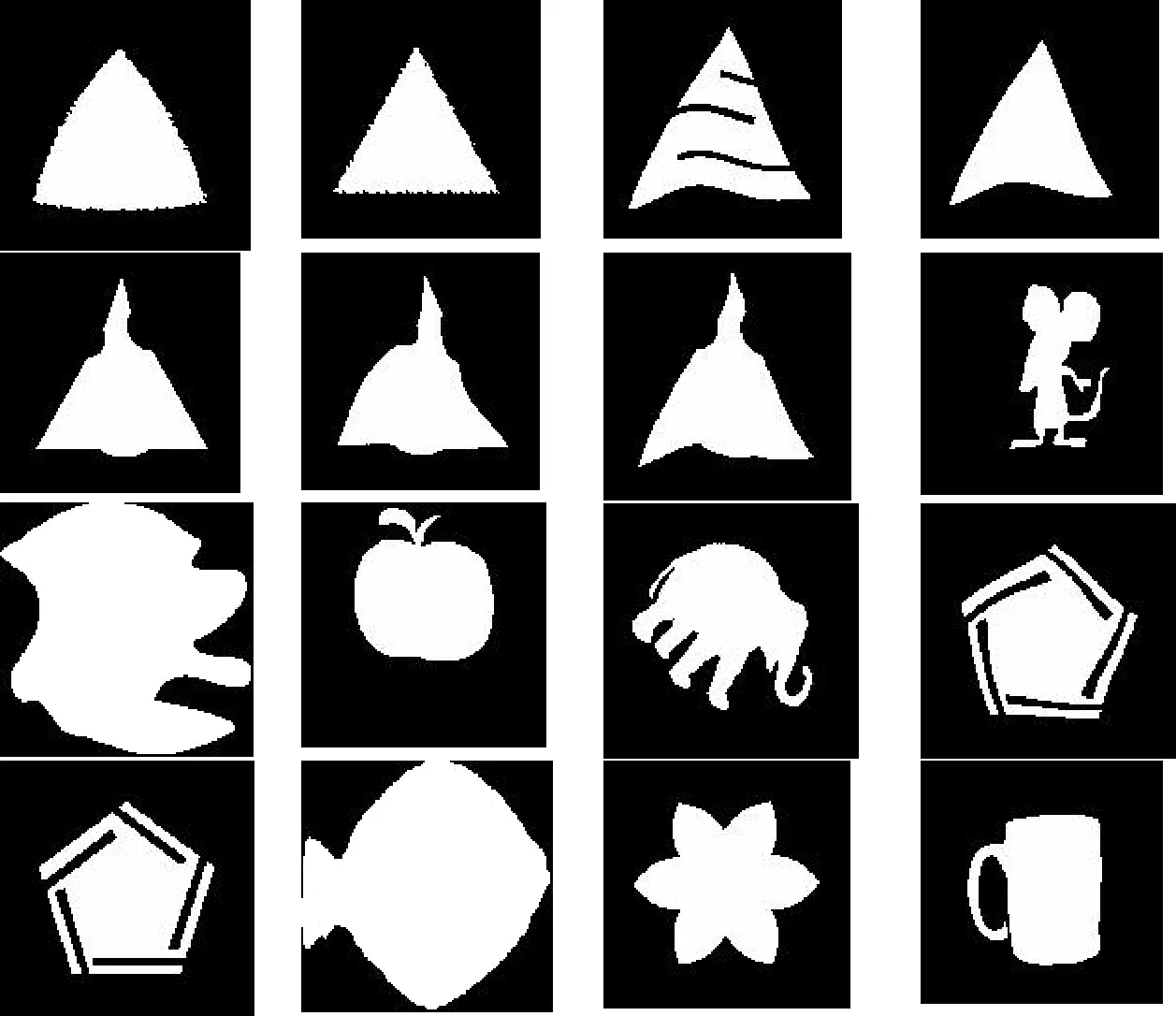

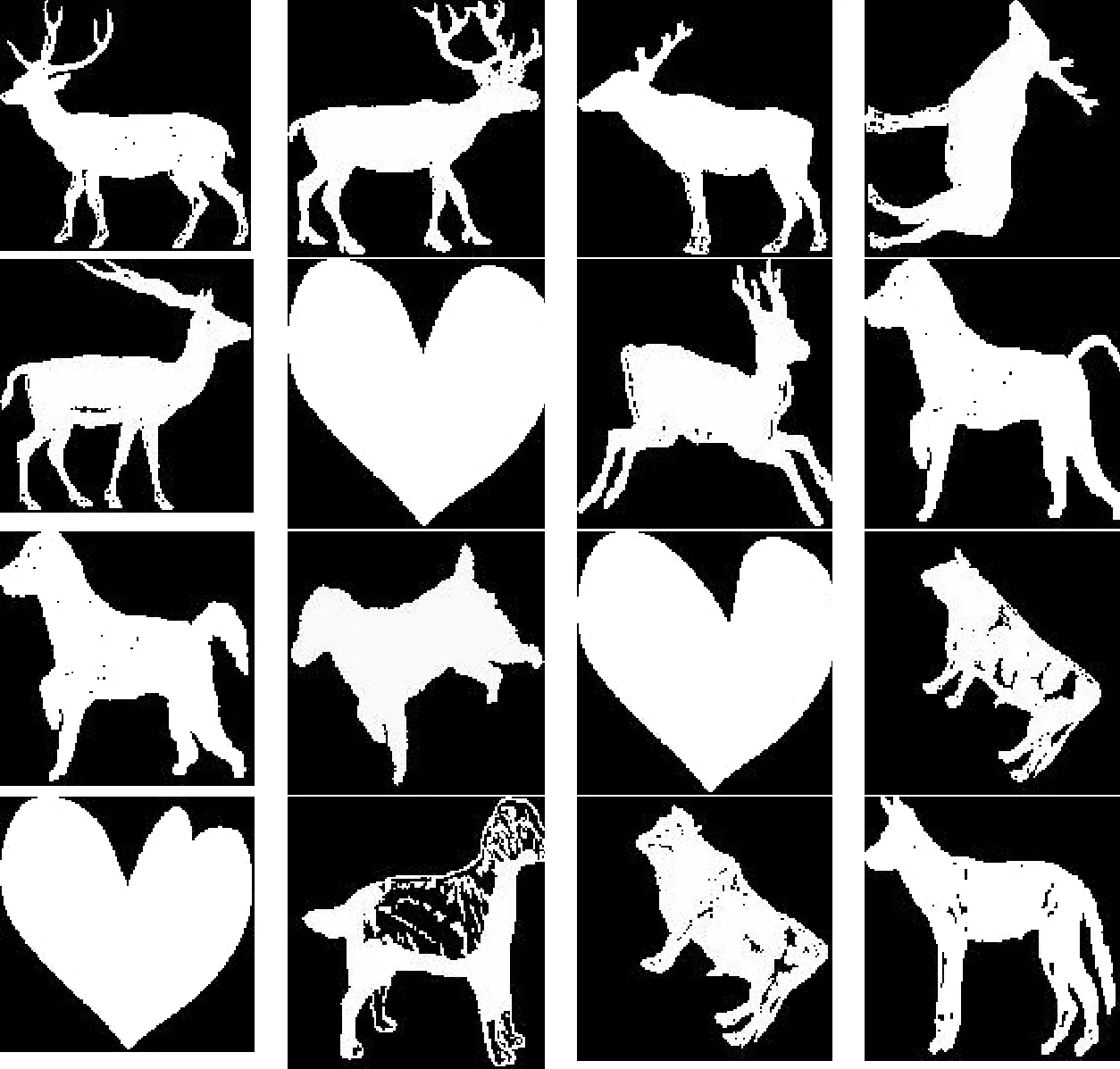

The limitations of ZMs discussed in Section 1 are studied here through the following experiments. Three classes of images are selected from the MPEG-7 database, namely Apple, Deer and Device 4. Fig.3, Fig.4 and Fig.5 show the retrieval results for the selected classes using ZMs of order 10 on the original data set. The left-most image in the first row is the query image, and the rest are the fifteen highest ranked retrieved images.
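The ranked lists in Fig.3 to Fig.5 come from sorting database images by the distance between their ZM feature vectors and the query's. The paper does not state which distance is used, so the sketch below assumes Euclidean distance. It also assumes a hypothetical dictionary that maps image names to feature vectors; `rank_database` and the sample data are illustrative only.

```python
from math import dist


def rank_database(query_feats, database, top_k=15):
    """Return the top_k (name, distance) pairs closest to the query.

    ASSUMPTION: Euclidean distance between ZM magnitude vectors.
    database maps an image name to its feature vector.
    """
    scored = [(name, dist(query_feats, feats)) for name, feats in database.items()]
    scored.sort(key=lambda item: item[1])  # smallest distance = most similar
    return scored[:top_k]


# Hypothetical 2-D features; real ZM vectors of order 10 are longer.
db = {"apple-1": [0.0, 0.0], "deer-1": [3.0, 4.0], "apple-2": [1.0, 0.0]}
print(rank_database([0.0, 0.0], db, top_k=2))  # [('apple-1', 0.0), ('apple-2', 1.0)]
```

If the query image is itself stored in the database, this sketch returns it first with distance 0. A display of the query plus fifteen retrieved images would then correspond to `top_k=16`.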

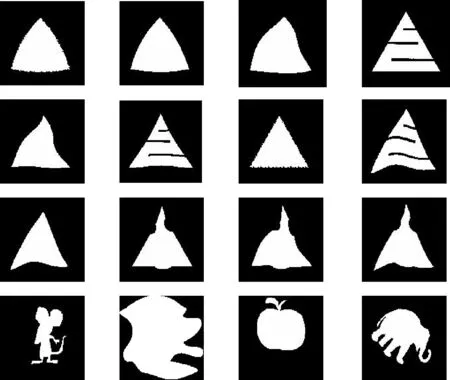

To analyze the limitations of ZMs discussed in the previous section, the five highest ranked images retrieved in Fig.3, Fig.4 and Fig.5 are modified to reflect the following situations:

- images containing multiple objects;
- objects of different sizes;
- objects at different locations;
- objects with different orientations;
- objects on different backgrounds.

Fig.6 to 10 present these modified images, which are visually similar to the five highest ranked retrieved images in Fig.3 to 5. The retrieval results after adding these modified, visually similar images to the database are presented to highlight their effect.

Fig.3 Retrieval result of apple class using ZMs order 10

Fig.4 Retrieval result of deer class using ZMs order 10

Fig.5 Retrieval result of device 4 class using ZMs order 10

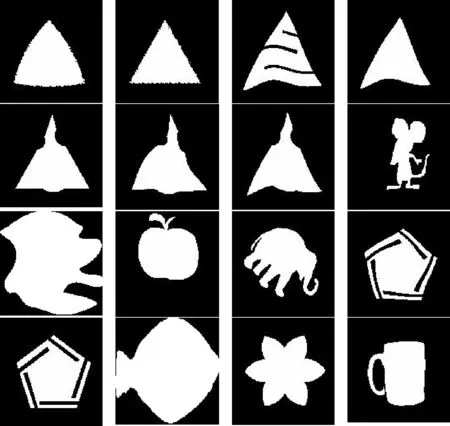

Fig.6 Visually similar images to five highest ranked retrieved images with multiple objects

Fig.7 Visually similar images to five highest ranked retrieved images with different sizes

Fig.8 Visually similar images to five highest ranked retrieved images with objects of different sizes at different locations

Fig.9 Visually similar images to five highest ranked retrieved images with objects of different sizes and orientations

Fig.10 Visually similar images to five highest ranked retrieved images with different backgrounds

2.1 Images with multiple objects

In this experiment, we added the modified images in Fig.6 to the test data set and used the three queries (Apple, Deer and Device 4) to retrieve similar images. Fig.11 to 13 show the ranked retrieval results. Although the images in Fig.6 are visually similar to the respective queries, they are ranked lower than images from other classes. The one exception is a deer image, which is ranked 11th. This result shows that adding a small object to the original object affects the ZM features and reduces retrieval accuracy.

Fig.11 Retrieval results of apple class

Fig.12 Retrieval results of deer class

Fig.13 Retrieval results of device 4 class

2.2 Images with objects of different sizes

In this experiment, we added the modified images in Fig.7 to the test data set and used the three queries (Apple, Deer and Device 4) to retrieve similar images. Fig.14 to 16 show the ranked retrieval results. Although the images in Fig.7 are visually similar to the respective queries, they are ranked lower than images from other classes. This result shows that objects of different sizes affect the ZM features and reduce retrieval accuracy.

Fig.14 Retrieval results of apple class

Fig.15 Retrieval results of deer class

Fig.16 Retrieval results of device 4 class

2.3 Images with objects at different locations

In this experiment, we added the modified images in Fig.8 to the test data set and used the three queries (Apple, Deer and Device 4) to retrieve similar images. Fig.17 to 19 show the ranked retrieval results. Although the images in Fig.8 are visually similar to the respective queries, they are ranked lower than images from other classes. This result shows that objects of different sizes at different locations affect the ZM features and reduce retrieval accuracy.

Fig.17 Retrieval results of apple class

Fig.18 Retrieval results of deer class

Fig.19 Retrieval results of device 4 class

2.4 Images with objects of different sizes and orientations

In this experiment, we added the modified images in Fig.9 to the test data set and used the three queries (Apple, Deer and Device 4) to retrieve similar images. Fig.20 to 22 show the ranked retrieval results. Although the images in Fig.9 are visually similar to the respective queries, they are ranked lower than images from other classes. The one exception is a deer image, which is ranked 12th. This result shows that objects of different sizes and orientations affect the ZM features and reduce retrieval accuracy.

Fig.20 Retrieval results of apple class

Fig.21 Retrieval results of deer class

Fig.22 Retrieval results of device 4 class

2.5 Images with objects on different backgrounds

In this experiment, we added the modified images in Fig.10 to the test data set and used the three queries (Apple, Deer and Device 4) to retrieve similar images. Fig.23 to 25 show the ranked retrieval results. Although the images in Fig.10 are visually similar to the respective queries, they are ranked lower than images from other classes. The one exception is an apple image, which is ranked 11th. This result shows that placing objects on different backgrounds affects the ZM features and reduces retrieval accuracy.

Fig.23 Retrieval results of apple class

Fig.24 Retrieval results of deer class

Fig.25 Retrieval results of device 4 class

The experimental study shows that the retrieval results for the selected classes are affected when images contain multiple objects, or objects of different sizes, at different locations, with different orientations or on different backgrounds. Most of the modified, visually similar images in Fig.6 to 10 that were added to the database are not among the top 15 retrieved images and are ranked lower than images from other classes.

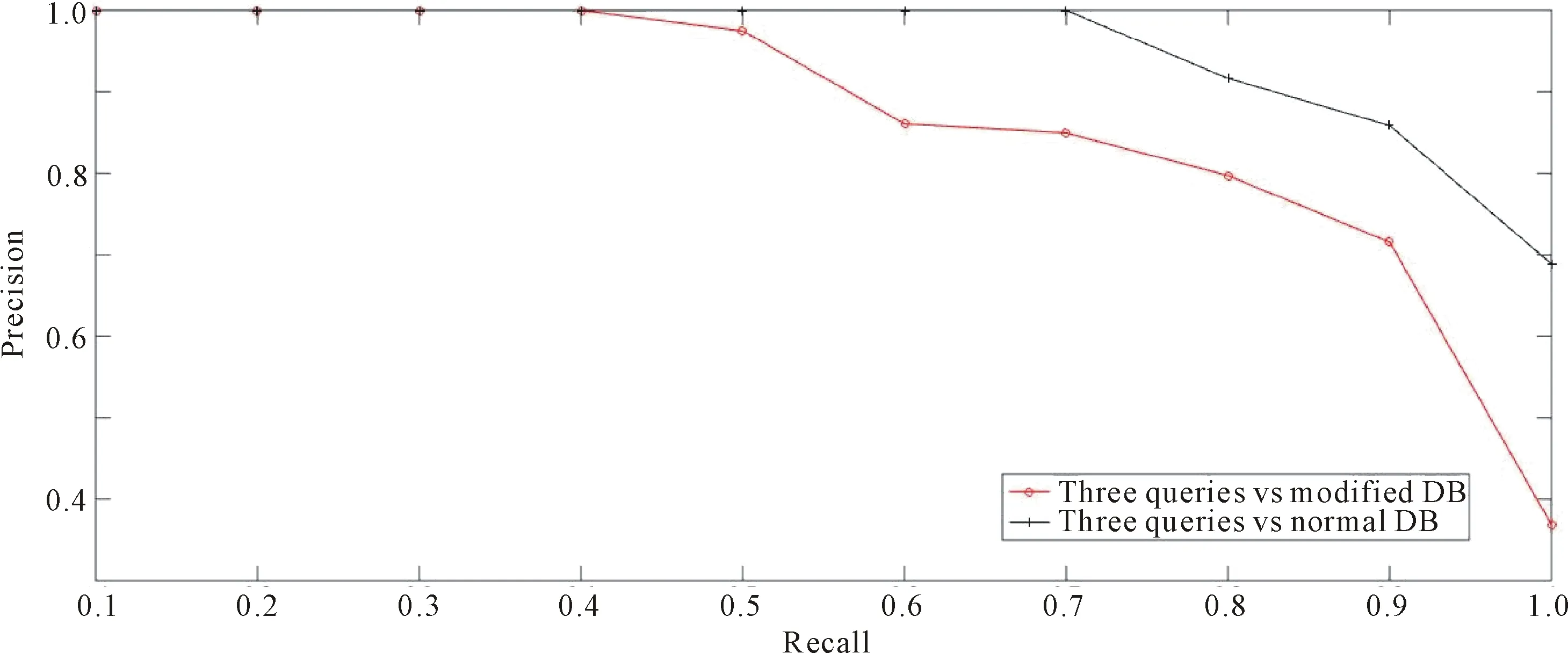

2.6 Precision and recall on the original and modified databases

Fig.26 shows the retrieval results of the three queries (Apple, Deer and Device 4) on the original database and on the modified databases used in Sections 2.1 to 2.5. As the precision and recall graph shows, retrieval accuracy is considerably lower for the modified databases. This is caused by the modifications discussed in Section 1: multiple objects, and objects of different sizes, at different locations, with different orientations and on different backgrounds. These modifications change the center of mass and/or the pixel values of the images and, consequently, the ZM feature vectors.

Fig.26 Retrieval results of three queries (Apple, Deer and Device 4) against the original database and modified databases
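Fig.26 summarizes retrieval accuracy as precision and recall graphs. The paper does not describe how precision is averaged over queries. One common way to build such a graph for several queries, shown here as an assumption, is to interpolate each query's precision at fixed recall levels and average across queries. The function names and the two sample queries below are hypothetical.

```python
def interpolated_precision(points, recall_levels):
    """Precision at each recall level, interpolated as the maximum precision
    reached at any recall >= that level (0.0 if the level is never reached).

    points: (precision, recall) pairs for one query, in rank order.
    """
    return [max((p for p, r in points if r >= level), default=0.0)
            for level in recall_levels]


def average_pr_curve(per_query_points, recall_levels):
    """Mean interpolated precision over several queries at each recall level."""
    curves = [interpolated_precision(pts, recall_levels) for pts in per_query_points]
    return [sum(column) / len(curves) for column in zip(*curves)]


levels = [0.25, 0.5, 0.75, 1.0]
query_a = [(1.0, 0.25), (1.0, 0.5), (2 / 3, 0.5), (0.75, 0.75)]  # hypothetical
query_b = [(0.5, 0.5), (0.5, 1.0)]                                # hypothetical
print(average_pr_curve([query_a, query_b], levels))  # [0.75, 0.75, 0.625, 0.25]
```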

3 Conclusion

ZMs have been used for image retrieval. However, they have been evaluated on databases in which each image contains a single, tightly bounded object at its center. ZMs are calculated within a unit circle centered at the center of mass of the image. Hence, changes to the image content can shift the center of mass and produce different ZM feature vectors, which affects the retrieval results.

General images may contain different objects with different sizes, locations, orientations and backgrounds, and any of these situations changes the center of mass and/or the pixel values of the image. This work studied how this calculation of ZMs affects retrieval performance. Our experimental results show that retrieval performance is considerably degraded in the presence of any of the aforementioned situations. Therefore, ZMs are not suitable for general image retrieval unless robust image segmentation is available as a preprocessing step. Unfortunately, to the best of our knowledge, no currently available automatic segmentation technique is robust enough to segment objects from general images in the manner required for ZMs to work effectively.

Reference

[1]ZHANG D, LU G. A comparative study of curvature scale space and Fourier descriptors for shape-based image retrieval[J/OL]. Journal of Visual Communication and Image Representation, 2003,14(1):39-57[2016-02-02].http://dx.doi.org/10.1016/S1047-3203(03)00003-8.

[2]MOKHTARIAN F, MACKWORTH A K. A theory of multiscale, curvature-based shape representation for planar curves[J/OL]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1992,14(8):789-805[2016-02-02].http://dx.doi.org/10.1109/34.149591.

[3]ZHANG D S, LU G J. A comparative study of Fourier descriptors for shape representation and retrieval[C/OL]//Proceedings of 5th Asian Conference on Computer Vision (ACCV2002). Australia Melbourne:Springer, 2002:646-651[2016-02-02].http://staff.itee.uq.edu.au/lovell/aprs/accv2002/accv2002_proceedings/Zhang646.pdf.

[4]DUBOIS S R, GLANZ F H. An autoregressive model approach to two-dimensional shape classification[J/OL]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1986,8(1):55-66[2016-02-02].http://dx.doi.org/10.1109/TPAMI.1986.4767752.

[5]ATTALLA E, SIY P. Robust shape similarity retrieval based on contour segmentation polygonal multiresolution and elastic matching[J/OL]. Pattern Recognition, 2005,38(12):2229-2241[2016-02-02].http://dx.doi.org/10.1016/j.patcog.2005.02.009.

[6]TEAGUE M R. Image analysis via the general theory of moments[J/OL]. Journal of the Optical Society of America, 1980,70(8):920-930[2016-02-02].http://dx.doi.org/10.1364/JOSA.70.000920.

[7]ZHANG D S, LU G J. Improving retrieval performance of Zernike moment descriptor on affined shapes[C/OL]//Multimedia and Expo, 2002. ICME’02. Proceedings. 2002 IEEE International Conference on: vol. 1. Switzerland Lausanne:IEEE,2002:205-208[2016-02-02].http://dx.doi.org/10.1109/ICME.2002.1035754.

[8]SINGH C. Local and global features based image retrieval system using orthogonal radial moments[J/OL]. Optics and Lasers in Engineering, 2012,50(5):655-667[2016-02-02].http://dx.doi.org/10.1016/j.optlaseng.2011.11.012.

[9]LI S, LEE M C, PUN C M. Complex Zernike moments features for shape-based image retrieval[J/OL]. IEEE Transactions on Systems, Man and Cybernetics, Part A: Systems and Humans, 2009,39(1):227-237[2016-02-02].http://dx.doi.org/10.1109/TSMCA.2008.2007988.

[10] KIM W Y, KIM Y S. A region-based shape descriptor using Zernike moments[J/OL]. Signal Processing: Image Communication, 2000,16(1/2):95-102[2016-02-02].http://dx.doi.org/10.1016/S0923-5965(00)00019-9.

[11] BELKASIM S O, SHRIDHAR M, AHMADI M. Pattern recognition with moment invariants: a comparative study and new results[J/OL]. Pattern Recognition, 1991,24(12):1117-1138[2016-02-02].http://dx.doi.org/10.1016/0031-3203(91)90140-Z.

[12] NOVOTNI M, KLEIN R. 3D Zernike descriptors for content based shape retrieval[C/OL]//SM’03 Proceedings of the eighth ACM symposium on Solid modeling and applications. New York: ACM, 2003:216-225[2016-02-02].http://dx.doi.org/10.1145/781606.781639.

[13] KIM H K, KIM J D, SIM D G, et al. A modified Zernike moment shape descriptor invariant to translation, rotation and scale for similarity-based image retrieval[C/OL]//Multimedia and Expo, 2000. ICME 2000. 2000 IEEE International Conference on(Volume:1). New York: IEEE,2000:307-310[2016-02-02].http://dx.doi.org/10.1109/ICME.2000.869602.

[14] KHOTANZAD A, HONG Y H. Invariant image recognition by Zernike moments[J/OL]. IEEE Transactions on Pattern Analysis and Machine Intelligence,1990,12(5):489-497[2016-02-02].http://dx.doi.org/10.1109/34.55109.

[15] WANG L, HEALEY G. Using Zernike moments for the illumination and geometry invariant classification of multispectral texture[J/OL]. IEEE Transactions on Image Processing, 1998,7(2):196-203[2016-02-02].http://dx.doi.org/10.1109/83.660996.

[16] XIN Y, PAWLAK M, LIAO S. Accurate computation of Zernike moments in polar coordinates[J/OL]. IEEE Transactions on Image Processing, 2007,16(2):581-587[2016-02-02].http://dx.doi.org/10.1109/TIP.2006.888346.

[17] LIAO S X, PAWLAK M. On the accuracy of Zernike moments for image analysis[J/OL]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1998,20(12):1358-1364[2016-02-02].http://dx.doi.org/10.1109/34.735809.

[18] TENG S W, LU G J. Image indexing and retrieval based on vector quantization[J/OL]. Pattern Recognition, 2007,40(11):3299-3316[2016-02-02].http://dx.doi.org/10.1016/j.patcog.2007.01.029.

[Edited by: Ray King]

10.13682/j.issn.2095-6533.2016.04.007

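Image retrieval systems are necessary and fundamental tools for handling large volumes of visual data, and effective image retrieval requires appropriate image descriptions. Zernike moments are often used for image description and retrieval. When testing the effectiveness of Zernike moments, most existing works have used relatively simple image datasets. This work studies the suitability of Zernike moments for describing and retrieving general images, which may contain objects of different sizes, at different locations, and with different orientations and backgrounds. Theoretical analysis and experimental tests show that Zernike moments are not suitable for describing and retrieving general images.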


on:2016-01-20

Supported by: Australian Research Council (DP130100024)

Contributed by: Shojanazeri, Hamid (1984-), PhD candidate, engaged in computer science and application research. E-mail: hamid.nazeri2010@gmail.com

Teng, Shyh Wei (1973-), PhD, senior lecturer, engaged in computer science and application research. E-mail: shyh.wei.teng@federation.edu.au