Clothing Parsing Based on Multi-Scale Fusion and Improved Self-Attention Mechanism

2023-12-28CHENNuoWANGShaoyu王绍宇LURanLIWenxuan李文萱QINZhidong覃志东SHIXiujin石秀金

CHEN Nuo(陈 诺), WANG Shaoyu(王绍宇), LU Ran (陆 然), LI Wenxuan(李文萱), QIN Zhidong(覃志东), SHI Xiujin(石秀金)

College of Computer Science and Technology, Donghua University, Shanghai 201620, China

Abstract:Due to the lack of long-range association and spatial location information, fine details and accurate boundaries of complex clothing images cannot always be obtained by using the existing deep learning-based methods. This paper presents a convolutional structure with multi-scale fusion to optimize the step of clothing feature extraction and a self-attention module to capture long-range association information. The structure enables the self-attention mechanism to directly participate in the process of information exchange through the down-scaling projection operation of the multi-scale framework. In addition, the improved self-attention module introduces the extraction of 2-dimensional relative position information to make up for its lack of ability to extract spatial position features from clothing images. The experimental results based on the colorful fashion parsing dataset (CFPD) show that the proposed network structure achieves 53.68% mean intersection over union (mIoU) and has better performance on the clothing parsing task.

Key words:clothing parsing; convolutional neural network; multi-scale fusion; self-attention mechanism; vision Transformer

0 Introduction

In recent years, with the continuous development of the garment industry and the increasing improvement of e-commerce platforms, people have gradually started to pursue the personalization of clothing matching. Virtual fitting technology enables consumers to accurately obtain clothing information and achieve the matching of clothing. At the same time, designers can obtain information about consumers’ shopping preferences through trend forecasting to grasp current fashion trends. For this purpose, parsing techniques that can extract various types of fashion items from complex images become a prerequisite to achieve the goal. However, clothing parsing is limited by various factors such as different clothing styles, models and environments. To solve this problem, researchers have proposed their own solutions from different perspectives of pattern recognition[1-2]and deep learning[3-6].

Recently, researchers have tried to introduce a model named Transformer to vision tasks. Dosovitskiyetal.[7]proposed vision Transformer (ViT) which divides an image into multiple patches and inputs the sequence of linear embeddings of these patches with position embedding into Transformer. This achieves a surprising performance on large-scale datasets. On this basis, researchers explored the integration of Transformer into traditional encoder-decoder frameworks to adapt to image parsing tasks. Zhengetal.[8]proposed segmentation Transformer (SETR) which consists of a pure Transformer and a simple decoder to confirm the feasibility of ViT for image parsing tasks. Inspired by frameworks such as SETR, Xieetal.[9]proposed SegFormer which does not require positional encoding and avoids the use of complex decoders. Liuetal.[10]improved the patch form of ViT and proposed Swin Transformer which achieves better results by sliding windows and relative positional encoding.

Despite the great promise and potential of applying Transformer to image parsing, there are still several challenges that are difficult to ignore when applying the Transformer architecture to parse clothing images. First, due to the time complexity limitation, current Transformer architectures use the flattened segmented patch of an image as the input sequence for self-attention or input the low-resolution feature map of the convolutional backbone network into the Transformer encoder. However, for complex clothing images, feature maps of different scales can affect the final parsing results. Second, the pure Transformer performs poorly on small-scale datasets due to its lack of inductive bias for visual tasks. The clothing parsing task, on the other hand, lacks large-scale data to meet the data requirement of the pure Transformer due to its rich variety and high resolution of practical applications.

In this paper, we propose a network named MFSANet based on multi-scale fusion and improved self-attention. MFSANet combines the respective advantages of convolution and self-attention for clothing parsing. We refer to the framework design of the high-resolution network (HRNet)[11]to extract and exchange the long-range association information and position information in each stage. MFSANet achieves good results on a generalized clothing parsing dataset and is promising to achieve good generalization in downstream tasks.

1 Related Work

1.1 Multi-scale fusion

Convolutional neural networks applied to image parsing essentially abstract the target image layer by layer to extract the features of each layer of the target. Since Zeileretal.[12]proposed the visualization of convolutional neural networks to formally describe the differences between the feature maps of deep and shallow networks in terms of geometric and semantic information representation, more and more researchers have focused on multi-scale fusion networks. Linetal.[13]proposed the feature pyramid network (FPN) containing a multi-scale structure with a pyramidal structure. It was a model for the design of subsequent pyramidal networks by sampling the features at the bottom layer and then recovering and fusing the features layer by layer to obtain high-resolution features. Chenetal.[14]proposed DeepLab with atrous convolution to obtain multi-scale information by adjusting the receptive field of the filter. HRNet with a parallel convolution structure maintains and aggregates features at each resolution, thus enhancing the extraction of multi-scale features. Inspired by the strong aggregation capability of the HRNet framework, we incorporate long-range association information of the Transformer to achieve clearer parsing.

1.2 Transformer

As the first work to transfer Transformer to image tasks with fewer changes and achieve state-of-the-art results on large-scale image datasets, ViT split the image into multiple patches with positional embeddings attached as sequences fed into the Transformer’s encoder. Based on that, Wangetal.[15]constructed the pyramid vision Transformer (PVT) with a pyramid structure, demonstrating the feasibility of applying Transformer to multi-scale fusion structures. Chuetal.[16]proposed Twins which enhanced the Transformer’s representation of hierarchical features without an absolute position embedding, optimizing the Transformer performance on dense prediction tasks.

2 Methods

2.1 Overall architecture

Figure 1 highlights the overall structure of MFSANet. We combine the characteristics of convolution and Transformer to extract local features and long-range information and use the multi-scale fusion structure to achieve information exchange. Meanwhile, the inductive bias of the convolution part of the hybrid network can effectively alleviate the lack of large-scale sample training of Transformer. We choose to include the Transformer module in the high-resolution feature maps that have participated in more multi-scale fusion to make more use of the information from multiple scales and incorporate long-range association information.

2.2 Multi-scale representation

Unlike the linear process of hybrid networks such as SETR and SegFormer, our network implements multi-scale parallel feature extraction for the input image. These multi-scale features simultaneously cover different local features of the image at different scales, which can fully extract the rich semantic information in the clothing images to improve the performance of parsing. After the parallel feature extraction is completed, the fusion operation will also be used to exchange multi-scale information to assist in generating feature maps at each scale by using high- and low-resolution semantic information, so that the feature maps at each scale contain richer information. Specifically, for stagek∈{2,3,4} represented as the blue region in Fig.1, given a multi-scale input feature map, the output of the stageX′ is expressed as

(1)

MHSA——multi-head self-attention; MLP——multi-layer perception.

whereXiis the input feature map of theith scale;Fusionmeans the fusion operation of the feature map;BasicBlockindicates the corresponding residual or Transformer operation. Each stage receives all the scale feature map output from the previous stage and generates the input feature map of the next stage after processing and fusion. As shown in Fig.1, there are two basic blocks inside the stages,i.e., the Transformer basic block and the residual basic block. The former is responsible for the long-range association information extraction of the high-resolution feature maps. The latter is responsible for the local feature information extraction of the low- and medium-resolution feature maps and completing the information exchange in the multi-scale fusion. In particular, the high-resolution feature map of stage 1 also has to pass through the residual basic block to complete the local feature information extraction of the high-resolution feature map.

2.3 Improved self-attention mechanism

The standard Transformer is built on the basis of the Multi-Head Attention (MHA) module[17], which uses multiple heads to compute self-attention synchronously, and finally concatenates and projects to complete the computation, thus enabling the network to jointly focus on information from different representation subspaces at different locations. For simplicity, the subsequent descriptions are developed based on a single attention head. The standard Transformer takes the feature mapX∈RC×H×Was input, whereCis the number of channels, andHandWare the height and width of the feature map. After projecting and flattening,Xgets the input of self-attention,Q,K,V∈Rn×d, whereQ,KandVare sequences of Query, Key and Value, respectively;n=H×W;dis the sequences’ dimension of each head. The key to self-attention is a scaled dot-product and it is computed as

The self-attention mechanism builds the correlation matrix between sequences by matrix dot product, thus obtaining long-range association information. However, this matrix dot product also bringsO(n2d) complexity, wherenis much larger than the usual image size in the field of high-resolution images such as clothing images, which leads to the fact that the self-attention in high-resolution images will be limited by the size. The square level of complexity is unacceptable for the current clothing images which are often of millions of pixels.

For clothing images, most of the local regions within the image have highly approximate features, which leads to many redundant parts for inter-pixel association computation on the global. Meanwhile, the theory proves that the contextual association matrixPin the self-attention mechanism is of low rank for long sequences[18],

Based on this, we design a self-attention mechanism for high-resolution images, as shown in Fig.2, and introduce a dimensionality reduction projection operation to generate equivalent sequencesKr=projection(K)∈Rnr×dandVr=Projection(V)∈Rnr×d, wherenr=Hr×Wr≪n;HrandWrare the reduced height and weight of input after projecting, respectively. The modified formula is

Fig.2 Improved self-attention mechanism architecture

By the dimensionality reduction projection operation, we reduce the complexity of the matrix computation toO(nnrd) without having a large impact on the Transformer’s effect, thus allowing it to adapt to the high-resolution input of the clothing image. The complexity of the input width and height, after being reduced to the fixed value or proportionally smaller values, has been able to allow for self-attention operations at high resolution.

In the field of vision, there are controversies about the role of relative and absolute position encoding for the Transformer, which led us to explore the application of relative position encoding on clothing parsing. For clothing images, their highly structured characteristics make their location features rich in detailed information. However, the standard Transformer is permutation equivariant, which cannot extract location information. Therefore, we refer to the two-dimensional relative position encoding[19]to introduce a complement to the relative position information. The attention logit using relative position from pixeli=(ix,iy) to pixelj=(jx,jy) is formulated as

(2)

whereqiandkjare the related query vector and key vector;rW, jx-ixandrH, jy-iyare learning embeddings for relative width and relative height, respectively. Similarly, the calculation of the relative position information is also subject to the dimensionality reduction projection operation. As shown in Fig.2,RHandRW, the corresponding learned embeddings, are added to the self-attention mechanism after the projection and aggregation operations. Therefore, the fully improved self-attention mechanism is expressed as:

(3)

whereSWr,SHr∈RHW×HrWr;SWrandSHrare the matrices of relative position logits, containing the position information of relative width and relative height.

3 Experiments

In this section, we experimentally validate the feasibility of the proposed network on the colorful fashion parsing dataset (CFPD)[20].

3.1 Experiment preparation

A graphic processing unit (GPU) was used in experiments to speed up the training of the network, and the optimization algorithm AdamW was used as an optimizer to accelerate the convergence of the network. We trained the network for 100 epochs, using an exponential learning rate scheduler with a warm-up strategy. In the training phase, a batch size of 4, an initial learning rate of 0.001, and a weight decay of 0.000 1 were used.

3.2 Experiment results

To verify the validity of the individual components and the overall framework proposed in the paper, we set up baseline and ablation experiments on the CFPD. The experimental results are shown in Table 1. Pixel accuracy (PA) and mean intersection over union (mIoU) are used as evaluation metrics. As shown in Table 1, compared with the baseline HRNet, the PA and mIoU of the MFSANet without relative position encoding (w/o RPE) increase 0.30% and 1.40%, while the PA and mIoU of the MFSANet increase 0.44% and 2.08%, respectively.

Table 1 Results of baseline and ablation experiments on the CFPD

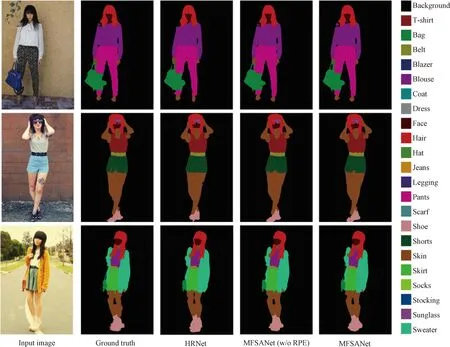

To explore the effect of the MFSANet in improving the accuracy of clothing parsing, the parsing results are visualized as shown in Fig.3. For the first example, the MFSANet can divide the blouse block above the shoulder and divide the background block between pants and blouses, confirming its powerful ability to extract details. For the second example, the MSFANet can accurately segment sunglasses between hair and faces, as well as a clear demarcation between shorts and leg skin. For the third example, the MSFANet successfully identifies the hand skin at the boundary of the sweater of the model’s left hand, demonstrating its ability to delineate the inter-class demarcation obtained by extracting association information. The MFSANet provides more consistent segmentation results for the boundary and details of image parsing, demonstrating its effectiveness and robustness.

Fig.3 Visual results on the CFPD

In Table 2, we compare the ability of the downscaling projection operation to reduce complexity for different target scales. For an input feature map withH=144 pixels andW=96 pixels, we setHrandWrto the numbers as shown in parentheses in Tabe 2. As can be seen in the second column, smaller scales correspond to fewer parameters, which demonstrates the effect of the operation on reducing the complexity. Due to the need for map building and learning of position relations in relative position encoding and the fact that this need is amplified by multiple repetitions in the structure, the method requires a smaller spatial complexity. The downscaling projection operation can adjust the memory occupation of the method to a reasonable size, which confirms the necessity of the downscaling projection operation. Therefore, the more reasonable parameters (16, 16) are used as our standard parameters.

Table 2 Comparison of different scales in downscaling projection operation

4 Conclusions

In this paper, a clothing parsing network based on multi-scale fusion and an improved self-attention mechanism is proposed. The network integrates the ability of self-attention to extract long-range association information with the overall architecture of multi-scale fusion through appropriate dimensionality reduction projection operations and incorporates two-dimensional relative position encoding to apply the rich position information in clothing images. The network proposed in this paper can effectively utilize the information from various aspects and accomplish the task of clothing parsing more accurately, thus providing help for practical garment field applications.

猜你喜欢

杂志排行

Journal of Donghua University(English Edition)的其它文章

- Laser-Induced Graphene Conductive Fabric Decorated with Copper Nanoparticles for Electromagnetic Interference Shielding Application

- Toughness Effect of Graphene Oxide-Nano Silica on Thermal-Mechanical Performance of Epoxy Resin

- Photoactive Naphthalene Diimide Functionalized Titanium-Oxo Clusters with High Photoelectrochemical Responses

- Preparation and Thermo-Responsive Properties of Poly(Oligo(Ethylene Glycol) Methacrylate) Copolymers with Hydroxy-Terminated Side Chain

- Synthesis, Characterization and Water Absorption Analysis of Highly Hygroscopic Bio-based Co-polyamides 56/66

- Design of Online Vision Detection System for Stator Winding Coil