Role of artificial intelligence in the diagnosis of oesophageal neoplasia: 2020 an endoscopic odyssey

2020-12-10

Mohamed Hussein, Juana Gonzalez-Bueno Puyal, Peter Mountney, Laurence B Lovat, Rehan Haidry

Abstract The past decade has seen significant advances in endoscopic imaging and optical enhancements to aid early diagnosis. There is still a treatment gap due to the underdiagnosis of lesions of the oesophagus. Computer aided diagnosis may play an important role in the coming years as an adjunct to endoscopists in the early detection and diagnosis of early oesophageal cancers, so that curative endoscopic therapy can be offered. Research in this area of artificial intelligence is expanding and the future looks promising. In this review article we review current advances in artificial intelligence in the oesophagus and future directions for development.

Key Words: Artificial intelligence; Oesophageal neoplasia; Barrett's oesophagus; Squamous dysplasia; Computer aided diagnosis; Deep learning

INTRODUCTION

The past decade has seen significant advances in endoscopic imaging and optical enhancements to aid early diagnosis. Oesophageal cancer (adenocarcinoma and squamous cell carcinoma) is associated with significant mortality[1]. As of 2018 oesophageal cancer was ranked seventh in the world in terms of cancer incidence and mortality, with 572000 new cases[2]. Oesophageal squamous cell carcinoma accounts for more than 90% of oesophageal cancers in China, with an overall 5-year survival rate of less than 20%[3].

Despite the technological advances there is still a treatment gap due to the underdiagnosis of lesions of the oesophagus[4]. A meta-analysis of 24 studies showed that missed oesophageal cancers are found within a year of index endoscopy in a quarter of patients undergoing surveillance for Barrett’s oesophagus (BE)[5]. A large multicentre retrospective study of 123395 upper gastrointestinal (GI) endoscopies showed an overall missed oesophageal cancer rate of 6.4%. The interval between a negative endoscopy and the diagnosis was less than 2 years in most cases[6]. Multivariate analysis showed that one of the factors associated with the miss rate was a less experienced endoscopist.

Efforts are necessary to improve the detection of early neoplasia secondary to BE and early squamous cell neoplasia (ESCN) such that curative minimally invasive endoscopic therapy can be offered to patients. Computer aided diagnosis may play an important role in the coming years in providing an adjunct to endoscopists in the early detection and diagnosis of early oesophageal cancers.

In this review article we will review current advances in artificial intelligence in the oesophagus and future directions for development.

DEFINITIONS

Machine learning is the use of mathematical models to capture structure in data[7]. The algorithms improve automatically through experience and do not need to be explicitly programmed[8]. The final trained models can be used to predict an oesophageal diagnosis. Machine learning is classified into supervised and unsupervised learning. During supervised learning, the model is trained with data containing pairs of inputs and outputs. It learns how to map the inputs to the outputs and applies this mapping to unseen data. In unsupervised learning the algorithm is given input data which are not directly linked to outputs and therefore has to formulate its own structure and set of patterns from the inputs[9].

Deep learning is a subtype of machine learning in which the model, a neural network, is composed of several layers of neurons, similar to the human brain. This enables automatic learning of features, which is particularly useful in endoscopy where images and videos lack structure and are not easily processed into specific features[9]. A convolutional neural network (CNN) is a subtype of deep learning which can take an input endoscopic image and learn specific features (e.g., colour, size, pit pattern), process the complex information through many different layers and produce an output prediction (e.g., oesophageal dysplasia or no dysplasia) (Figure 1).

Figure 1 A deep learning model. Features of an endoscopic image processed through multiple neural layers to produce a predicted diagnosis of oesophageal cancer or no oesophageal cancer present on the image.

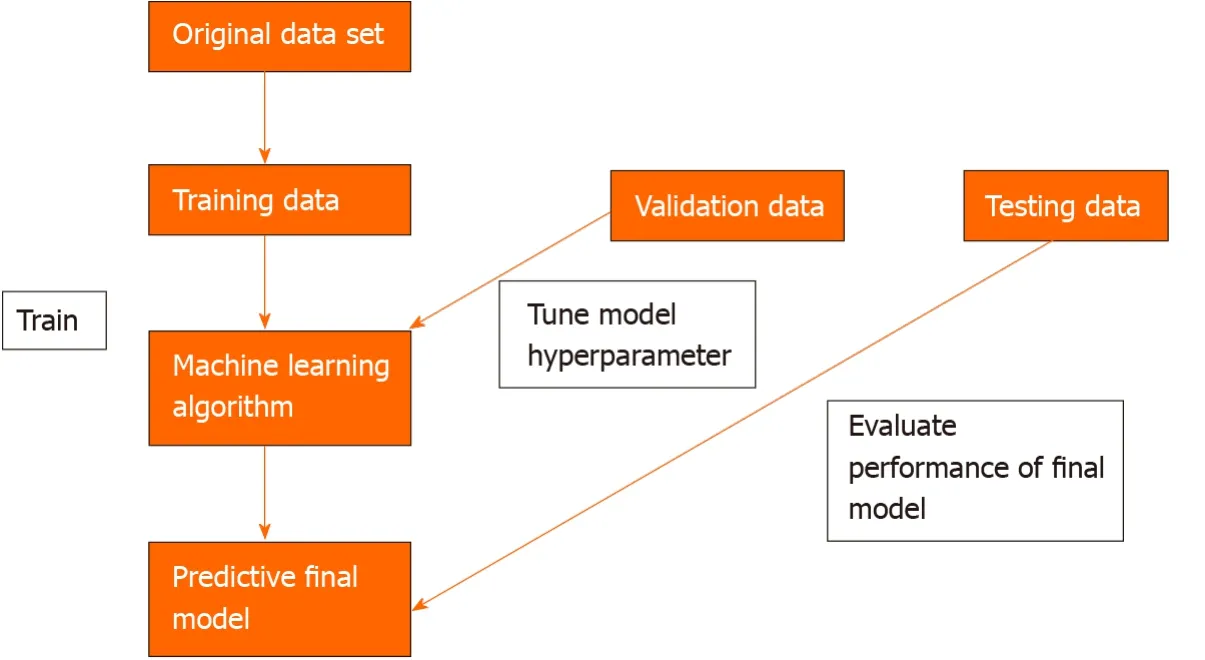

To develop a machine learning model, data need to be split into 3 independent groups: a training set, a validation set and a testing set. The training set is used to build a model using the oesophageal labels (e.g., dysplasia or no dysplasia). The validation set provides an unbiased evaluation of the model’s skill whilst tuning the hyperparameters of the model, for example, the number of layers in the neural network. It is used to ensure that the model is not overfitting to the training data. Overfitting means that the model performs well on the training data but not on the unseen testing data. The test set is used to evaluate the performance of the final predictive model[7] (Figure 2).
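The three-way split described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure of any study cited in this review: the function name `split_by_patient`, the 70/15/15 proportions, the fixed seed and the patient identifiers are all hypothetical. Splitting by patient rather than by image is also an assumption; it is one way of keeping the three groups independent, because no patient contributes images to more than one group.

```python
import random


def split_by_patient(patient_ids, train_frac=0.70, val_frac=0.15, seed=0):
    """Split unique patient IDs into training, validation and test groups.

    The fractions and the seed are illustrative assumptions. Every image
    from a given patient ends up in exactly one group.
    """
    unique_ids = sorted(set(patient_ids))
    rng = random.Random(seed)
    rng.shuffle(unique_ids)

    n_train = round(len(unique_ids) * train_frac)
    n_val = round(len(unique_ids) * val_frac)

    train = set(unique_ids[:n_train])
    val = set(unique_ids[n_train:n_train + n_val])
    test = set(unique_ids[n_train + n_val:])  # whatever remains
    return train, val, test


# Hypothetical example: 20 patients labelled P00..P19.
patients = [f"P{i:02d}" for i in range(20)]
train_ids, val_ids, test_ids = split_by_patient(patients)
```

Hyperparameters would then be tuned against `val_ids` only, with `test_ids` held back until the final model is fixed.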

ADVANCES IN ENDOSCOPIC IMAGING

Endoscopic imaging has advanced into a new era with the development of high definition digital technology. A charge coupled device chip in standard white light endoscopy produces an image signal of 10000 to 400000 pixels displayed in a standard definition format. The chips in a high definition white light endoscope produce image signals of 850000 to 1.3 million pixels displayed in high definition[10]. This has improved our ability to pick up the most subtle oesophageal mucosal abnormalities by assessing mucosal pit patterns and vascularity to allow a timely diagnosis of dysplasia or early cancer.

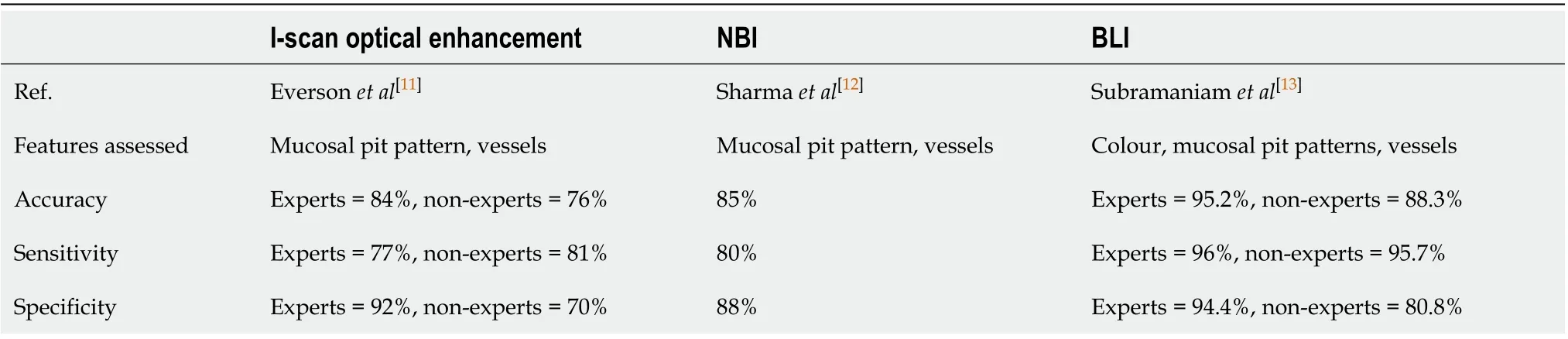

There have been further advances in optical technology in the endoscope with chromoendoscopy such as narrow-band imaging (NBI), i-scan (Pentax, Hoya) and blue laser imaging (Fujinon), which have further improved early neoplasia detection and diagnosis in the oesophagus. Table 1 summarises some of the studies investigating the accuracy of these imaging modalities in detecting BE dysplasia by formulating classification systems based on mucosal pit pattern, colour and vascular architecture.

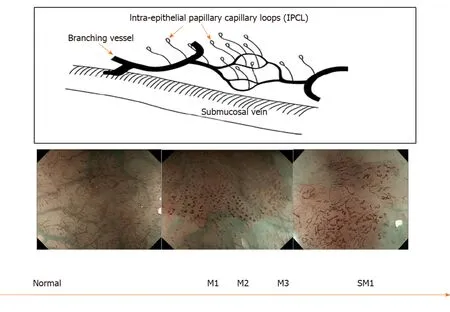

In squamous epithelium the microvascular patterns of intrapapillary capillary loops (IPCL) are used to aid in the diagnosis of early squamous cell cancer (Figure 3)[14]. The classification systems that are currently used are based on magnification endoscopy assessment of IPCL patterns[15].

The disordered and distorted mucosal and vascular patterns used to define the above classifications can be used to train the CNN to detect early cancer in the oesophagus.

BE AND EARLY CANCER

BE is the only identifiable premalignant condition associated with invasive oesophageal adenocarcinoma. There is a linear progression from non-dysplastic BE, to low-grade and high-grade dysplasia. Early neoplasia which is confined to the mucosa has significant eradication rates of > 80%[16].

The standard of care for endoscopic surveillance of patients with BE is random biopsies taken as part of the Seattle protocol, where four-quadrant biopsies are taken every 2 cm of BE[17]. This method is not perfect and is associated with sampling error. The area of a 2 cm segment of BE is approximately 14 cm², and a single biopsy sample is approximately 0.125 cm². Therefore, the four Seattle protocol biopsies will only cover 0.5 cm² of the oesophageal mucosa, which is approximately 3.5% of the BE segment[18]. Dysplasia can often be focal and therefore easily missed. Studies have also shown that compliance with this protocol is poor and is worse on longer segments of BE[19].
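The sampling arithmetic above can be reproduced with a short calculation. The sketch below uses only the approximate areas quoted in the text; the function name `seattle_coverage` is hypothetical. The computed fraction is roughly 3.6%, in line with the figure of approximately 3.5% quoted above.

```python
def seattle_coverage(segment_area_cm2=14.0, biopsy_area_cm2=0.125,
                     biopsies_per_level=4):
    """Return (sampled area in cm^2, fraction of the BE segment sampled).

    Defaults are the approximate values quoted in the text for a 2 cm
    segment of BE sampled with four-quadrant biopsies.
    """
    sampled = biopsy_area_cm2 * biopsies_per_level
    return sampled, sampled / segment_area_cm2


sampled_area, fraction = seattle_coverage()
print(f"Sampled area: {sampled_area} cm^2")
print(f"Fraction of segment sampled: {fraction:.1%}")
```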

Table 1 Studies showing accuracy in the detection of Barrett’s oesophagus dysplasia for each endoscopic modality

Figure 2 Three independent data sets are required to create a machine learning model that can predict an oesophageal cancer diagnosis.

The American Society for Gastrointestinal Endoscopy Preservation and Incorporation of Valuable Endoscopic Innovations (PIVI) initiative was developed to direct endoscopic technology development. Any imaging technology with targeted biopsies in BE would need to achieve a threshold per patient sensitivity of at least 90% for the detection of high-grade dysplasia and intramucosal cancer. It would require a specificity of at least 80% in BE in order to eliminate the requirement for random mucosal biopsies during BE endoscopic surveillance. This would improve the cost and effectiveness of a surveillance programme. This is the minimum target an AI technology would need to meet in order to be ready for prime time and to serve as a possible adjunct during a BE surveillance endoscopy[20].
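As a worked illustration of these thresholds, the sketch below computes sensitivity and specificity from per-patient counts and checks them against the two targets stated above. The function names and the counts are hypothetical and invented purely for illustration; only the 90% and 80% thresholds come from the text.

```python
def sensitivity(tp, fn):
    """Proportion of patients with disease whom the test flags as positive."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Proportion of disease-free patients whom the test flags as negative."""
    return tn / (tn + fp)


def meets_pivi_thresholds(sens, spec, min_sens=0.90, min_spec=0.80):
    """Check the two per-patient thresholds described in the text."""
    return sens >= min_sens and spec >= min_spec


# Hypothetical per-patient counts (not taken from any cited study).
tp, fn, tn, fp = 46, 4, 85, 15
sens = sensitivity(tp, fn)  # 46 / 50
spec = specificity(tn, fp)  # 85 / 100
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}, "
      f"meets thresholds: {meets_pivi_thresholds(sens, spec)}")
```

Both conditions must hold together: a system with a sensitivity of 88% and a specificity of 80%, for example, would fall short on sensitivity alone.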

An early study tested a computer algorithm developed based on 100 images from 44 patients with BE. It was trained using colour and texture filters. The algorithm diagnosed neoplastic lesions on a per image level with a sensitivity and specificity of 0.83. At the patient level a sensitivity and specificity of 0.86 and 0.87 were achieved, respectively. This was the first study where a detection algorithm was developed for detecting BE lesions and compared with expert annotations[21].

A recent study developed a hybrid ResNet-UNet model computer aided diagnosis system which classified images as containing neoplastic or non-dysplastic BE with a sensitivity and specificity of 90% and 88% respectively. It achieved higher accuracy than non-expert endoscopists[22].

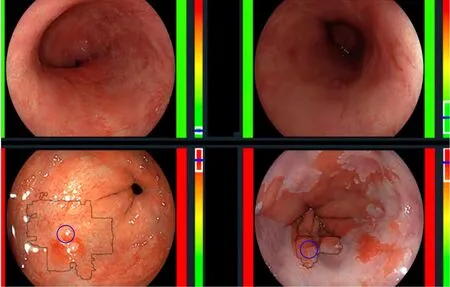

De Groof et al[23] performed one of the first studies to assess the accuracy of a computer-aided detection (CAD) system during live endoscopic procedures in 10 patients with BE dysplasia and 10 patients without BE dysplasia. Three images were evaluated every 2 cm of BE by the CAD system. Sensitivity and specificity of the CAD system in per-level analysis were 91% and 89% respectively (Figure 4).

Figure 3 Intrapapillary capillary loop patterns during magnification endoscopy to assess for early squamous cell neoplasia and depth of invasion. M1, M2, M3 = invasion of epithelium, lamina propria and muscularis mucosae respectively. SM1 = superficial submucosal invasion. Citation: Inoue H, Kaga M, Ikeda H, Sato C, Sato H, Minami H, Santi EG, Hayee B, Eleftheriadis N. Magnification endoscopy in esophageal squamous cell carcinoma: a review of the intrapapillary capillary loop classification. Ann Gastroenterol 2015; 28: 41-48. Copyright© The Authors 2015. Published by Hellenic Society of Gastroenterology.

Hussein et al[24] developed a CNN trained using a balanced data set of 73266 frames from BE videos of 39 patients. On an independent validation set of 189436 frames from 19 patients the CNN could detect dysplasia with a sensitivity of 88.3% and specificity of 80%. The annotations were created from and tested on frames from whole videos, minimising selection bias.

Volumetric laser endomicroscopy (VLE) is a wide field imaging technology used to aid endoscopists in the detection of dysplasia in BE. An infrared light produces a circumferential scan of 6 cm segments of BE up to a depth of 3 mm, allowing the oesophageal layer and submucosal layer with its associated vascular networks to be visualised[25]. The difficulty is that there are large volumes of complex data which the endoscopist needs to interpret. An artificial intelligence system called intelligent real-time image segmentation has been used to interpret the data produced from VLE. This software identifies 3 VLE features associated with histological evidence of dysplasia and displays the output with colour schemes. A hyper-reflective surface (pink colour) suggests that there is increased surface maturation, cellular crowding and increased nuclear-to-cytoplasmic ratio. Hyporeflective structures (blue colour) suggest abnormal morphology of BE epithelial glands. A lack of layered architecture (orange colour) differentiates squamous epithelium from BE (Figure 5)[26]. A recent study retrospectively analysed ex-vivo images from 29 BE patients with and without early cancer. A CAD system which analysed multiple neighbouring VLE frames showed improved neoplasia detection in BE relative to single frame analysis, with an AUC of 0.91[27].
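The idea of using neighbouring frames rather than a single frame can be illustrated with a simple moving average over per-frame scores. This is only a sketch of the general principle and should not be read as the method of the cited study, whose details are not described here. The function name `smooth_scores`, the window of 3 frames, the decision threshold of 0.5 and the example scores are all assumptions.

```python
def smooth_scores(frame_scores, window=3):
    """Average each frame's score with its neighbours (centred moving average).

    `window` is the total number of frames averaged and should be odd. At the
    ends of the sequence the window is truncated to the frames that exist.
    """
    half = window // 2
    smoothed = []
    for i in range(len(frame_scores)):
        lo = max(0, i - half)
        hi = min(len(frame_scores), i + half + 1)
        neighbourhood = frame_scores[lo:hi]
        smoothed.append(sum(neighbourhood) / len(neighbourhood))
    return smoothed


# Hypothetical per-frame neoplasia scores with one isolated spike at index 2.
scores = [0.1, 0.1, 0.9, 0.1, 0.1]
smoothed = smooth_scores(scores)

threshold = 0.5  # assumed decision threshold
flagged_single = [s >= threshold for s in scores]
flagged_multi = [s >= threshold for s in smoothed]
```

In this toy example the isolated spike is flagged by single frame analysis but not after averaging, whereas a run of consistently high scores would survive the smoothing. Whether this trade-off helps in practice depends on the data.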

Table 2 provides a summary of all the studies investigating the development of deep learning algorithms for the diagnosis of early neoplasia in BE.

ESCN

With advances in endoscopic therapy in recent years, ESCN confined to the mucosal layer can be curatively resected endoscopically with a < 2% incidence of local lymph node metastasis. IPCL are the microvascular features which can be used endoscopically to help identify and classify ESCN and to assess whether there is invasion into the muscularis mucosa and submucosal tissue[16].

Lugol’s chromoendoscopy is a screening method for identifying ESCN during an upper GI endoscopy. However, despite a sensitivity of > 90%, it is associated with a low specificity of approximately 70%[32]. There is also a risk of allergic reaction with iodine staining. Advanced endoscopic imaging with NBI has a high accuracy for detecting ESCN; however, a randomised controlled trial showed its specificity was approximately 50%[33]. Computer assisted detection systems have been developed to try to overcome many of these issues and to aid endoscopists in detecting early ESCN lesions.

Table 2 Summary of all the studies investigating the development of machine learning algorithms for the detection of dysplasia in Barrett’s oesophagus

Everson et al[16] developed a CNN trained with 7046 sequential high definition magnification endoscopy images with NBI. These were classified by experts using the IPCL patterns and based on the Japanese Endoscopic Society classification. The CNN was able to accurately classify abnormal IPCL patterns with a sensitivity and specificity of 89% and 98% respectively. The diagnostic prediction times were between 26 and 37 ms (Figure 6).

Nakagawa et al[34] developed a deep learning-based AI algorithm using over 14000 magnified and non-magnified endoscopic images from 804 patients. This was able to predict the depth of invasion of ESCN with a sensitivity of 90.1% and specificity of 95.8% (Figure 7).

Figure 4 The computer-aided detection system providing real time feedback regarding absence of dysplasia (top row) or presence of dysplasia (bottom row). Citation: de Groof AJ, Struyvenberg MR, Fockens KN, van der Putten J, van der Sommen F, Boers TG, Zinger S, Bisschops R, de With PH, Pouw RE, Curvers WL, Schoon EJ, Bergman JJGHM. Deep learning algorithm detection of Barrett's neoplasia with high accuracy during live endoscopic procedures: a pilot study (with video). Gastrointest Endosc 2020; 91: 1242-1250. Copyright© The Authors 2020. Published by Elsevier.

Guo et al[3] trained a CAD system using 6473 NBI images for real time automated diagnosis of ESCN. The deep learning model was able to detect early ESCN on still NBI images with a sensitivity of 98% and specificity of 95%. On analysis of videos the per frame sensitivity was 60.8% on non-magnified images and 96.1% on magnified images. The per lesion sensitivity was 100%. This model had high sensitivity and specificity in both still images and real time video, setting the scene for the development of better models for real time detection of early ESCN.
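The difference between per frame and per lesion sensitivity can be made concrete with a small sketch. The data below are hypothetical, and counting a lesion as detected when at least one of its frames is flagged is an assumption made for illustration rather than the definition used in the study above.

```python
def per_frame_sensitivity(lesion_frames):
    """Fraction of all lesion-containing frames that were flagged."""
    flags = [flag for frames in lesion_frames.values() for flag in frames]
    return sum(flags) / len(flags)


def per_lesion_sensitivity(lesion_frames):
    """Fraction of lesions with at least one flagged frame.

    Treating a single flagged frame as a detected lesion is an assumption
    made for this sketch.
    """
    detected = [any(frames) for frames in lesion_frames.values()]
    return sum(detected) / len(detected)


# Hypothetical video output: lesion ID -> per-frame detections (True = flagged).
lesion_frames = {
    "lesion_A": [True, False, False, True, False],
    "lesion_B": [False, True, True, True, False],
}
frame_sens = per_frame_sensitivity(lesion_frames)    # 5 of 10 frames flagged
lesion_sens = per_lesion_sensitivity(lesion_frames)  # 2 of 2 lesions detected
```

Under this definition a model can miss many individual frames and still detect every lesion, which is how a modest per frame sensitivity can coexist with a per lesion sensitivity of 100%.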

Endocytoscopy uses a high-power fixed-focus objective lens attached to the endoscope to give ultra-high magnification images. The area of interest is stained to allow identification of cellular structures, as in standard histopathology techniques. This allows the endoscopist to characterise ESCN and make a real time histological diagnosis[35].

Kumagai et al[36] developed a CNN trained using more than 4000 endocytoscopic images of the oesophagus (malignant and non-malignant). The AI was able to diagnose oesophageal squamous cell carcinoma with a sensitivity of 92.6%. This provides a potential AI tool which can aid the endoscopist by making an in vivo histological diagnosis. This would allow endoscopists to make a clinical decision in the same endoscopic session regarding resection of the early oesophageal cancer, which would potentially save on costs by replacing the need for protocol biopsies.

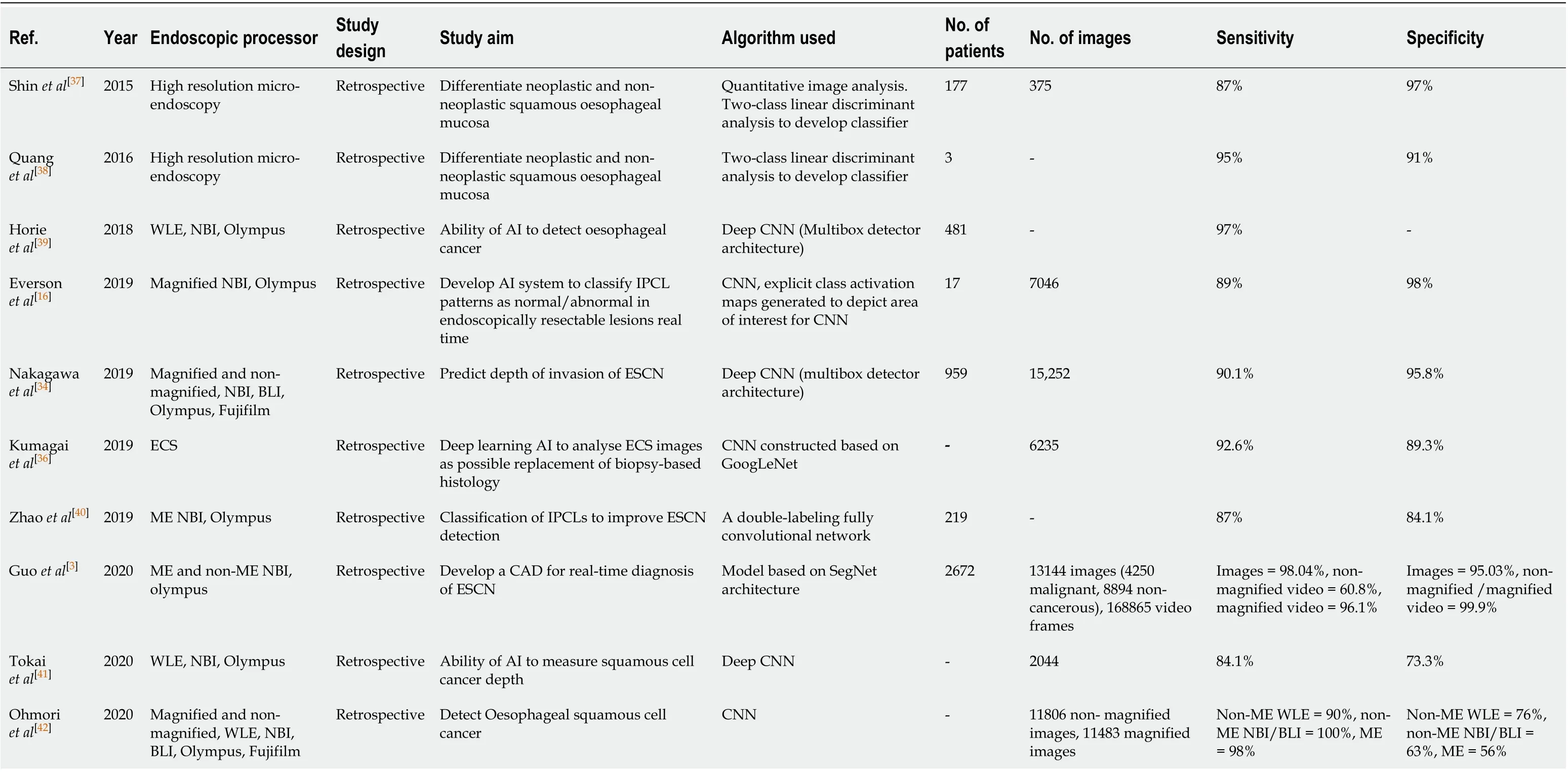

Table 3 provides a summary of all the studies investigating the development of deep learning algorithms for the diagnosis of ESCN.

AI AND HISTOLOGY ANALYSIS IN OESOPHAGEAL CANCER

In digital pathology tissue slides are scanned as high-resolution images as each slide contains a large volume of cells. The cellular structure needs to be visible to the histopathologist in order to identify areas of abnormality[43]. Histopathological analysis often requires a lot of time, high costs and manual annotation of areas of interest by the histopathologists. There is also a possible miss rate of areas of early oesophageal dysplasia as the area can be focal. There is also suboptimal interobserver agreement among expert GI histopathologists in certain histological diagnoses such as low-grade dysplasia in BE[44].

Table 3 Summary of all the studies investigating the development of machine learning algorithms for the detection of early squamous cell neoplasia

A novel AI system to detect and delineate areas of early oesophageal cancer on histology slides could be a key adjunct to histopathologists and help improve detection and delineation of early oesophageal cancer.

Figure 5 Volumetric laser endomicroscopy image showing area of overlap (yellow arrow) between the 3 features of dysplasia identified with the colour schemes. A: View looking down into the oesophagus; B: Close up of dysplastic area; C: Forward view of the dysplastic area. A-C: Citation: Trindade AJ, McKinley MJ, Fan C, Leggett CL, Kahn A, Pleskow DK. Endoscopic Surveillance of Barrett's Esophagus Using Volumetric Laser Endomicroscopy With Artificial Intelligence Image Enhancement. Gastroenterology 2019; 157: 303-305. Copyright© The Authors 2019. Published by Elsevier.

Tomita et al[43] developed a convolutional attention-based mechanism to classify microscopic images into normal oesophageal tissue, BE with no dysplasia, BE with dysplasia and oesophageal adenocarcinoma using 123 histological images. Classification accuracy of the model was 0.85 in the BE-no dysplasia group, 0.89 in the BE with dysplasia group, and 0.88 in the oesophageal adenocarcinoma group.

ROLE OF AI IN QUALITY CONTROL IN THE OESOPHAGUS

The inspection time of the oesophagus and clear mucosal views have an impact on the quality of an oesophagoscopy and the yield of early oesophageal neoplasia detection. Assessment should take place with the oesophagus partially insufflated between peristaltic waves. An overly insufflated oesophagus can flatten a lesion, which can in turn be missed[45]. The British Society of Gastroenterology consensus guidelines on the quality of upper GI endoscopy recommend adequate mucosal visualisation achieved by a combination of aspiration, adequate air insufflation and use of mucosal cleansing techniques. They recommend that the quality of mucosal visualisation and the inspection time during a Barrett’s surveillance endoscopy should be reported[46].

Chen et al[47] investigated their AI system, ENDOANGEL, which provides prompting of blind spots during upper GI endoscopy, informs the endoscopist of the inspection time and gives a grading score of the percentage of the mucosa that is visualised.

CONCLUSION

Computer aided diagnosis of oesophageal pathology may be a key adjunct for the endoscopist which will improve the detection of early neoplasia in BE and ESCN such that curative endoscopic therapy can be offered. There are significant miss rates of oesophageal cancers despite advances in endoscopic imaging modalities, and an AI tool may help offset the human factors associated with some of these miss rates.

Figure 6 Input images on the left and corresponding heat maps on the right illustrating the features recognised by the convolutional neural network when classifying images by recognising the abnormal intrapapillary capillary loops patterns in early squamous cell neoplasia. Citation: Everson M, Herrera L, Li W, Luengo IM, Ahmad O, Banks M, Magee C, Alzoubaidi D, Hsu HM, Graham D, Vercauteren T, Lovat L, Ourselin S, Kashin S, Wang HP, Wang WL, Haidry RJ. Artificial intelligence for the real-time classification of intrapapillary capillary loop patterns in the endoscopic diagnosis of early oesophageal squamous cell carcinoma: A proof-of-concept study. United European Gastroenterol J 2019; 7: 297-306. Copyright© The Authors 2019. Published by SAGE Journals.

Figure 7 Esophageal squamous cell cancer diagnosed by the artificial intelligence system as superficial cancer with SM2 invasion. A and B: Citation: Nakagawa K, Ishihara R, Aoyama K, Ohmori M, Nakahira H, Matsuura N, Shichijo S, Nishida T, Yamada T, Yamaguchi S, Ogiyama H, Egawa S, Kishida O, Tada T. Classification for invasion depth of esophageal squamous cell carcinoma using a deep neural network compared with experienced endoscopists. Gastrointest Endosc 2019; 90: 407-414. Copyright© The Authors 2019. Published by Elsevier.

At the same time it is key that AI systems avoid ‘overfitting’, where a system performs well on training data but underperforms when exposed to new data. An AI system needs to be able to detect early oesophageal cancer in low- and high-quality frames during real time endoscopy. This requires training on high volumes of both low- and high-quality data and testing on both low- and high-quality data to reflect the real world setting during an endoscopy.

Further research is required on the use of AI in quality control in the oesophagus in order to allow endoscopists to meet the quality indicators necessary during a surveillance endoscopy as set out in many of the international guidelines. This will ensure a minimum standard of endoscopy is met.

Research in this area of AI is expanding and the future looks promising. To fulfil this potential the following are required: (1) Further development to improve the performance of AI technology in the oesophagus in detecting early cancer/dysplasia in BE or ESCN during real time endoscopy; (2) High quality clinical evidence from randomised controlled trials; and (3) Guidelines from clinical bodies or national institutes. Once implemented this will have a significant impact on this field of endoscopy.
