Particle-filter-based walking prediction model for occlusion situations*

2013-11-01

Yoonchang Sung, Woojin Chung

(School of Mechanical Engineering, Korea University, Seoul 136-713, Korea)

In the field of mobile robotics, human tracking has emerged as an important objective for facilitating human-robot interaction. In this paper, we propose a particle-filter-based walking prediction model that addresses occlusion situations. Since the target being tracked is a human leg, a motion model for a leg is required. The validity of the proposed model is verified experimentally.

human-following; particle filter; motion model

0 Introduction

Human-following, combined with human-friendly technology, is currently being actively developed. Human-following can be applied in many service areas, including guide robots in a museum, nursing robots in a hospital, or porter robots in a factory.

In order to achieve human-following functionality, an estimation process is required. There are many Bayesian approaches to estimating a target of interest, of which the particle filter is a well-known one. The advantages of a particle filter over a Kalman filter or extended Kalman filter are that multimodal states of a target can be represented and that nonlinear and non-Gaussian motion can be handled. A description of a particle filter can be found in Ref.[1]. Moreover, a particle filter can deal with an occlusion over a short time period. In this paper, we propose a walking prediction model that considers the occlusion situation and implement it in a particle filter.

Various sensors can be adopted to perceive a human from the viewpoint of a mobile robot. In the tracking community, vision sensors[2] and laser sensors[3] are the most popular. In our method, we use a laser range finder (LRF), which obtains accurate distance information. The target being tracked is a human leg. A human leg is chosen not only because obstacles are normally located at the height of a human leg, but also because leg tracking is easy to integrate with an autonomous navigation function. There have been many studies on human leg tracking, for example, Refs.[4] and [5]. Our proposed walking prediction model is therefore based on leg motion.

It is very important to keep track of a target when the target person is occluded from the robot's view by an obstacle. This paper is based on our previous research[6]. In Ref.[6], a novel outlier detection method based on support vector data description is described for extracting leg data from sensor information. The walking prediction model is presented and applied using a particle filter.

The rest of this paper is organized as follows. In section 1, sampling importance resampling related to a particle filter is explained briefly. Section 2 presents the proposed walking prediction model. Experimental results are shown in section 3. Section 4 concludes this paper.

1 Particle filter

Particle filtering is a Monte Carlo method that has been studied for several decades[7]. It represents the posterior probability by a set of random samples with associated weights, written as

$$\{x_i(k),\ w_i(k)\}_{i=1}^{N}.$$

Conventional particle filtering, however, suffers from sample degeneracy, which lowers the diversity of the samples. To address this problem, the use of a sampling importance resampling (SIR) filter is suggested in Refs.[8] and [9]. Its resampling step concentrates the samples where the weights are large while maintaining the diversity of the samples. According to Ref.[1], the posterior probability of the particle filter at time k can be computed as

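$$p\big(x(k) \mid z(1{:}k)\big) \approx \sum_{i=1}^{N} w_i(k)\, \delta\big(x(k) - x_i(k)\big), \tag{1}$$

where N is the total number of samples used, z(1:k) denotes the measurements up to time k, and δ(·) is the Dirac delta function.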
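
The predict, update, and resample cycle of an SIR filter can be sketched as follows. This is a minimal illustration rather than the implementation used in this paper: the names `sir_step`, `systematic_resample`, `motion`, and `likelihood` are hypothetical, systematic resampling is only one common choice of resampling scheme, and skipping the update when no measurement is available is an assumption made here to mimic an occlusion.

```python
import math
import random


def systematic_resample(weights, rng):
    """Return indices drawn by systematic resampling from normalized weights."""
    n = len(weights)
    start = rng.random() / n          # single random offset in [0, 1/n)
    indices = []
    cumulative = weights[0]
    i = 0
    for j in range(n):
        u = start + j / n             # evenly spaced pointers
        while u >= cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        indices.append(i)
    return indices


def sir_step(particles, weights, measurement, motion, likelihood, rng):
    """One SIR iteration: predict, re-weight, then resample."""
    # Prediction: push every sample through the noisy motion model.
    predicted = [motion(p, rng) for p in particles]
    if measurement is None:
        # Assumption: during an occlusion only the prediction is applied.
        return predicted, weights
    # Update: scale each weight by the measurement likelihood.
    updated = [w * likelihood(measurement, p)
               for w, p in zip(weights, predicted)]
    total = sum(updated)
    if total == 0.0:
        # No sample explains the measurement; fall back to uniform weights.
        updated = [1.0 / len(predicted)] * len(predicted)
    else:
        updated = [w / total for w in updated]
    # Resampling: duplicate heavy samples, drop light ones, reset weights.
    chosen = systematic_resample(updated, rng)
    resampled = [predicted[i] for i in chosen]
    uniform = [1.0 / len(resampled)] * len(resampled)
    return resampled, uniform


def weighted_mean(particles, weights):
    """Point estimate for scalar samples: the weighted mean."""
    return sum(w * p for w, p in zip(weights, particles))
```

For a scalar state, `motion` could be `lambda p, rng: p + rng.gauss(0.0, 0.1)` and `likelihood` a Gaussian in the difference between the measurement and the sample. Both are placeholders for the leg motion model and the LRF measurement model.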

(2)

Eq.(2) presents the motion model for a leg, where $\varepsilon_k$ is the process noise at time k. In this manner, we implement a sampling importance resampling (SIR) filter to estimate the states of the leg targets. The next step is to define the motion model for a leg so that we can propose the walking prediction model.

2 Walking prediction model

We propose the walking prediction model as the motion model for application to an SIR filter in order to address occlusion situations.

Human walking consists of straight walking and rotating walking in a 2-D plane. According to Mochon’s research[10], we can assume that straight walking is a uniformly accelerated motion. By using previously extracted leg data, we can predict rotating walking as well.

In this paper, we consider the case in which another pedestrian passes between the target person and the robot, that is, an occlusion over a short time period. The objective of the walking prediction model is to predict the motion of straight walking and that of rotating walking at the next time step, based on the 10 time steps of extracted leg information preceding the occlusion.

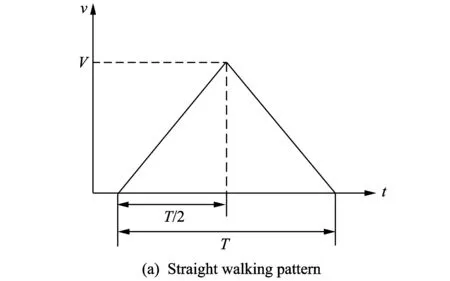

As shown in Fig.1(a), assuming that straight walking is a uniformly accelerated motion, the maximum velocity of straight walking, V, over T seconds can be computed from the previous walking information using the following equation.

(3)
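
The idea of extrapolating the walking speed under a uniform-acceleration assumption can be illustrated with a short sketch. It is not Eq.(3) itself: the finite-difference speed estimate, the two-point acceleration estimate, and the names `step_speeds` and `predict_speed` are assumptions introduced here for illustration only.

```python
import math


def step_speeds(positions, dt):
    """Speed between consecutive (x, y) leg positions sampled every dt seconds."""
    return [math.hypot(x1 - x0, y1 - y0) / dt
            for (x0, y0), (x1, y1) in zip(positions, positions[1:])]


def predict_speed(positions, dt, horizon):
    """Extrapolate the speed `horizon` seconds ahead under constant acceleration."""
    speeds = step_speeds(positions, dt)
    if len(speeds) < 2:
        raise ValueError("need at least three positions")
    # Constant-acceleration assumption: a = (v_last - v_first) / elapsed time.
    accel = (speeds[-1] - speeds[0]) / (dt * (len(speeds) - 1))
    # v(T) = v_last + a * T, clamped at zero because a speed cannot be negative.
    return max(0.0, speeds[-1] + accel * horizon)
```

With a 100 ms sampling period, `positions` would hold the leg positions extracted over the time steps preceding the occlusion, and `horizon` the prediction interval T.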

Fig.1 Walking prediction model

In the case of rotating walking, previous walking information must be transformed into the local coordinate system of the time step at which the target person is lost, as shown in Fig.1(b). The rotated angle, in degrees, is obtained as the difference between $\theta_1$ and $\theta_2$, where

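$$\theta_1 = \operatorname{arctan2}\big(y_1 - y_0,\ x_1 - x_0\big) \times \frac{180}{\pi},$$

$$\theta_2 = \operatorname{arctan2}\big(y_2 - y_1,\ x_2 - x_1\big) \times \frac{180}{\pi}. \tag{4}$$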
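
The heading computation of Eq.(4) maps directly onto `math.atan2`. The sketch below computes both headings from three consecutive leg positions and returns their difference. Wrapping the result into [-180, 180) is an addition made here so that a turn across the ±180° boundary is not reported as a near-full rotation, and the function names are hypothetical.

```python
import math


def heading_deg(p_from, p_to):
    """Heading of the segment p_from -> p_to in degrees, as in Eq.(4)."""
    return math.degrees(math.atan2(p_to[1] - p_from[1], p_to[0] - p_from[0]))


def rotation_deg(p0, p1, p2):
    """Signed turn from segment p0->p1 to p1->p2, wrapped to [-180, 180)."""
    diff = heading_deg(p1, p2) - heading_deg(p0, p1)
    # Assumption: wrap the difference so that 170 deg -> -170 deg reads as +20 deg.
    return (diff + 180.0) % 360.0 - 180.0
```

For example, walking from (0, 0) to (1, 0) and then to (1, 1) corresponds to a left turn of 90 degrees.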

For each time step, the values of Eqs.(3) and (4) are obtained for the prediction process. In other words, samples are predicted on the basis of this walking prediction model.
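
How the samples might be propagated with the predicted speed and rotation can be sketched as below. This is an assumption-laden illustration, not the motion model of Eq.(2): the sample layout `(x, y, heading_deg)`, the Gaussian noise terms, the default noise levels, and the function names are all hypothetical.

```python
import math
import random


def predict_sample(sample, speed, turn_deg, dt, rng,
                   pos_sigma=0.02, ang_sigma=2.0):
    """Move one (x, y, heading_deg) sample by the predicted walk plus noise."""
    x, y, heading = sample
    # Rotating walking: apply the predicted turn, perturbed by angular noise.
    heading = heading + turn_deg + rng.gauss(0.0, ang_sigma)
    # Straight walking: advance along the new heading at the predicted speed.
    step = speed * dt
    rad = math.radians(heading)
    x += step * math.cos(rad) + rng.gauss(0.0, pos_sigma)
    y += step * math.sin(rad) + rng.gauss(0.0, pos_sigma)
    return (x, y, heading)


def predict_samples(samples, speed, turn_deg, dt, rng):
    """Apply the walking prediction to every sample independently."""
    return [predict_sample(s, speed, turn_deg, dt, rng) for s in samples]
```

Applying `predict_samples` repeatedly without a measurement update makes the sample cloud spread out along the predicted path, which matches the growth in uncertainty expected during an occlusion.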

3 Experimental results

To evaluate the performance of our method, we conducted experiments with a Pioneer 3DX mobile robot in a real-world environment. The LRF used is a SICK LMS-200, and its sampling period is set to 100 ms.

Fig.2 shows the mobile robot platform and the experimental environment, in which another pedestrian passes between the target person and the mobile robot.

Fig.2 Experimental environment for occlusion situation

Fig.3 illustrates the results of the experiment using a Matlab simulation. The differently colored marks denote different samples for the target.

In Fig.3(a), the target person is occluded by another pedestrian, and therefore the legs of the target person cannot be detected at that time. Consequently, the samples for the target person's legs diverge, as there are no observed measurements with respect to the target person.

However, after the occlusion has ended, as shown in Fig.3(b), the target person is rediscovered. The time difference between Fig.3(a) and Fig.3(b) is 2 s.

Fig.3 Results of the case

Fig.4 indicates the error distance of the proposed walking prediction model applied to the experiment.

Fig.4 Error distance of walking prediction model

The errors are obtained as the Euclidean distance between the measurement and the center of the samples of the targets. As seen in Fig.4, at the time of the occlusion the error increases, because the samples spread out in order to search for the target in a larger area. With the proposed walking prediction model, it can be seen that the mobile robot platform can re-track the target person after the occlusion.
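
The error measure described above can be written in a few lines. The sketch assumes that the "center of the samples" is the unweighted mean of the sample positions, which is reasonable after resampling when all weights are equal. The function names are hypothetical.

```python
import math


def sample_center(samples):
    """Unweighted centroid of (x, y) samples (assumed definition of the center)."""
    n = len(samples)
    return (sum(s[0] for s in samples) / n, sum(s[1] for s in samples) / n)


def error_distance(measurement, samples):
    """Euclidean distance between the measured position and the sample center."""
    cx, cy = sample_center(samples)
    return math.hypot(measurement[0] - cx, measurement[1] - cy)
```

Evaluating `error_distance` at every time step for which a measurement of the target exists would give an error curve of the kind plotted in Fig.4.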

4 Conclusion

In this paper, we propose a walking prediction model using a particle filter. The proposed walking prediction model considers both straight walking and rotating walking simultaneously. We conduct experiments to show that our method can robustly deal with an occlusion over a short time period.

[1] Arulampalam M S, Maskell S, Gordon N, et al. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Transactions on Signal Processing, 2002, 50(2): 174-188.

[2] Suzuki S, Mitsukura Y, Takimoto H, et al. A human tracking mobile-robot with face detection. In: Proceedings of the 35th Annual Conference of IEEE Industrial Electronics (IECON’09), Porto, Portugal, 2009: 4217-4222.

[3] CUI Jin-shi, ZHA Hong-bin, ZHAO Hui-jing, et al. Laser based detection and tracking of multiple people in crowds. Computer Vision and Image Understanding, 2007, 106(2/3): 300-312.

[4] SHAO Xiao-wei, ZHAO Hui-jing, Nakamura K, et al. Detection and tracking of multiple pedestrians by using laser range scanners. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, California, USA, 2007: 2174-2179.

[5] Lee J H, Tsubouchi T, Yamamoto K, et al. People tracking using a robot in motion with laser range finder. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 2006: 2936-2942.

[6] Chung W J, Kim H Y, Yoo Y K, et al. The detection and following of human legs through inductive approaches for a mobile robot with a single laser range finder. IEEE Transactions on Industrial Electronics, 2012, 59(8): 3156-3166.

[7] Doucet A, de Freitas N, Gordon N. An introduction to sequential Monte Carlo methods// Doucet A, de Freitas N, Gordon N. Sequential Monte Carlo methods in practice. Springer-Verlag, New York, 2001.

[8] Gordon N J, Salmond D J, Smith A F M. Novel approach to nonlinear/non-Gaussian Bayesian state estimation. IEE Proceedings F (Radar and Signal Processing), 1993, 140(2): 107-113.

[9] Almeida A, Almeida J, Araujo R. Real-time tracking of multiple moving objects using particle filters and probabilistic data association. Automatika, 2005, 46(1/2): 39-48.

[10] Mochon S, McMahon T A. Ballistic walking. Journal of Biomechanics, 1980, 13(1): 49-57.

date: 2013-05-11

The MKE(Ministry of Knowledge Economy), Korea, under the ITRC(Information Technology Research Center) support program (NIPA-2013-H0301-13-2006) supervised by the NIPA(National IT Industry Promotion Agency); The National Research Foundation of Korea(NRF) grant funded by the Korea government(MEST) (2013-029812); The MKE(Ministry of Knowledge Economy), Korea, under the Human Resources Development Program for Convergence Robot Specialists support program supervised by the NIPA (National IT Industry Promotion Agency) (NIPA-2013-H1502-13-1001)

Woojin Chung (smartrobot@korea.ac.kr)

CLC number: TP242.6 Document code: A

1674-8042(2013)03-0263-04

10.3969/j.issn.1674-8042.2013.03.013
